A properly conducted regression analysis will include an assessment of how well the assumed form is matched by the observed data, but it can only do so within the range of values of the independent variables actually available. This means that any extrapolation is particularly reliant on the assumptions being made about the structural form of the regression relationship. If the available knowledge includes the fact that the dependent variable cannot go outside a certain range of values, this can be used in selecting the model, even if the observed dataset has no values particularly near such bounds. The choice of an appropriate functional form for the regression can therefore matter greatly when extrapolation is considered. At a minimum, it can ensure that any extrapolation arising from a fitted model is "realistic" (or in accord with what is known).

(or polyserial correlations) between the categorical variables. Such procedures differ in the assumptions made about the distribution of the variables in the population. If the variable is positive with low values and represents the repetition of the occurrence of an event, then count models like the

7766:

applications and on some calculators. While many statistical software packages can perform various types of nonparametric and robust regression, these methods are less standardized. Different software packages implement different methods, and a method with a given name may be implemented differently

1533:

in 1809. Legendre and Gauss both applied the method to the problem of determining, from astronomical observations, the orbits of bodies about the Sun (mostly comets, but also later the then newly discovered minor planets). Gauss published a further development of the theory of least squares in 1821,

1508:

between the independent and dependent variables. Importantly, regressions by themselves only reveal relationships between a dependent variable and a collection of independent variables in a fixed dataset. To use regressions for prediction or to infer causal relationships, respectively, a researcher

7304:

are sometimes more difficult to interpret if the model's assumptions are violated. For example, if the error term does not have a normal distribution, then in small samples the estimated parameters will not follow normal distributions, which complicates inference. With relatively large samples, however, a

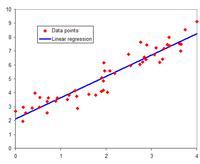

In the middle, the interpolated straight line represents the best balance between the points above and below this line. The dotted lines represent the two extreme lines. The first curves represent the estimated values. The outer curves represent a prediction for a new

in the class of linear unbiased estimators. Practitioners have developed a variety of methods to maintain some or all of these desirable properties in real-world settings, because these classical assumptions are unlikely to hold exactly. For example, modeling

1509:

must carefully justify why existing relationships have predictive power for a new context or why a relationship between two variables has a causal interpretation. The latter is especially important when researchers hope to estimate causal relationships using

Performing extrapolation relies strongly on the regression assumptions. The further the extrapolation goes outside the data, the more room there is for the model to fail due to differences between the assumptions and the sample data or the true values.

By itself, a regression is simply a calculation using the data. In order to interpret the output of regression as a meaningful statistical quantity that measures real-world relationships, researchers often rely on a number of classical

is the number of observations needed to reach the desired precision if the model had only one independent variable. For example, a researcher is building a linear regression model using a dataset that contains 1000 patients

{\displaystyle {\hat {\sigma }}_{\beta _{0}}={\hat {\sigma }}_{\varepsilon }{\sqrt {{\frac {1}{n}}+{\frac {{\bar {x}}^{2}}{\sum (x_{i}-{\bar {x}})^{2}}}}}={\hat {\sigma }}_{\beta _{1}}{\sqrt {\frac {\sum x_{i}^{2}}{n}}}.}
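
The standard-error formulas for the straight-line case can be checked numerically. The following is a minimal sketch in plain Python, not part of the original text; the function name `simple_ols_standard_errors` and the data values are illustrative assumptions. It estimates the error standard deviation from SSR/(n − 2) and then evaluates both standard errors.

```python
import math


def simple_ols_standard_errors(x, y):
    """Return (sigma_eps, se_beta0, se_beta1) for the fit y = beta0 + beta1 * x."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need equal-length samples with at least 3 points")
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    if s_xx == 0:
        raise ValueError("x values must not all be identical")
    # Least-squares slope and intercept.
    beta1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / s_xx
    beta0 = y_bar - beta1 * x_bar
    # Sum of squared residuals and error standard deviation (n - 2 degrees of freedom).
    ssr = sum((yi - (beta0 + beta1 * xi)) ** 2 for xi, yi in zip(x, y))
    sigma_eps = math.sqrt(ssr / (n - 2))
    se_beta1 = sigma_eps * math.sqrt(1.0 / s_xx)
    se_beta0 = sigma_eps * math.sqrt(1.0 / n + x_bar ** 2 / s_xx)
    return sigma_eps, se_beta0, se_beta1


# Hypothetical data, chosen only for illustration.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
sigma_eps, se_beta0, se_beta1 = simple_ols_standard_errors(x, y)

# Second form of the intercept's standard error: se_beta1 * sqrt(sum(x_i^2) / n).
se_beta0_alt = se_beta1 * math.sqrt(sum(xi ** 2 for xi in x) / len(x))
```

On this made-up data the two expressions for the intercept's standard error agree up to rounding, as the identity above implies.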

The response variable may be non-continuous ("limited" to lie on some subset of the real line). For binary (zero or one) variables, if analysis proceeds with least-squares linear regression, the model is called the

that represents the uncertainty may accompany the point prediction. Such intervals tend to expand rapidly as the values of the independent variable(s) move outside the range covered by the observed data.
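
The widening can be illustrated for the straight-line case. The sketch below is an illustration, not part of the original text. It assumes the standard textbook expression for the standard error of a new observation at a point x0, namely sigma_eps * sqrt(1 + 1/n + (x0 - x_bar)^2 / sum((x_i - x_bar)^2)); the function name and the data are hypothetical. A full interval would multiply this quantity by a quantile of the t distribution, which is omitted here.

```python
import math


def prediction_standard_error(x, y, x0):
    """Standard error of a new observation at x0 under a fitted straight line."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need equal-length samples with at least 3 points")
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    if s_xx == 0:
        raise ValueError("x values must not all be identical")
    beta1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / s_xx
    beta0 = y_bar - beta1 * x_bar
    ssr = sum((yi - (beta0 + beta1 * xi)) ** 2 for xi, yi in zip(x, y))
    sigma_eps = math.sqrt(ssr / (n - 2))
    # The (x0 - x_bar)^2 term grows as x0 moves away from the observed data.
    return sigma_eps * math.sqrt(1.0 + 1.0 / n + (x0 - x_bar) ** 2 / s_xx)


# Hypothetical data observed on the range 1..5.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
se_inside = prediction_standard_error(x, y, 3.0)    # at the sample mean of x
se_outside = prediction_standard_error(x, y, 20.0)  # far outside the data
```

Under these assumptions the standard error is smallest at the mean of the observed x values and increases with distance from it.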

, regression in which the predictor (independent variable) or response variables are curves, images, graphs, or other complex data objects, regression methods accommodating various types of missing data,

2255:

1545:

in the 19th century to describe a biological phenomenon. The phenomenon was that the heights of descendants of tall ancestors tend to regress down towards a normal average (a phenomenon also known as

is largely focused on developing techniques that allow researchers to make reasonable real-world conclusions in real-world settings, where classical assumptions do not hold exactly.

7499:

There are no generally agreed methods for relating the number of observations to the number of independent variables in the model. One method conjectured by Good and Hardin is

7402:

When the model function is not linear in the parameters, the sum of squares must be minimized by an iterative procedure. This introduces many complications which are summarized in

2901:

) of the dependent variable when the independent variables take on a given set of values. Less common forms of regression use slightly different procedures to estimate alternative

4728:

Returning our attention to the straight line case: Given a random sample from the population, we estimate the population parameters and obtain the sample linear regression model:

Interpretations of these diagnostic tests rest heavily on the model's assumptions. Although examination of the residuals can be used to invalidate a model, the results of a

type models may be used when the sample is not randomly selected from the population of interest. An alternative to such procedures is linear regression based on

methods for regression, regression in which the predictor variables are measured with error, regression with more predictor variables than observations, and

1573:

of the response variable is

Gaussian, but the joint distribution need not be. In this respect, Fisher's assumption is closer to Gauss's formulation of 1821.

7483:

However, this does not cover the full set of modeling errors that may be made: in particular, the assumption of a particular form for the relation between

Under the further assumption that the population error term is normally distributed, the researcher can use these estimated standard errors to create

13705:

{\displaystyle \sum _{i}{\hat {e}}_{i}^{2}=\sum _{i}({\hat {Y}}_{i}-({\hat {\beta }}_{0}+{\hat {\beta }}_{1}X_{1i}+{\hat {\beta }}_{2}X_{2i}))^{2}=0}
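
A small numerical sketch can make the underdetermined case concrete. It is an illustration with made-up numbers, not part of the original text: with only two rows of data and three unknown parameters, more than one parameter vector reproduces the observed values exactly, so the sum of squared residuals is zero for each of them. The helper name `sum_squared_residuals` is hypothetical.

```python
def sum_squared_residuals(rows, beta):
    """Sum of squared residuals for Y = b0 + b1 * X1 + b2 * X2."""
    b0, b1, b2 = beta
    return sum((y - (b0 + b1 * x1 + b2 * x2)) ** 2 for x1, x2, y in rows)


# Two hypothetical observations, each given as (X1, X2, Y).
rows = [(1.0, 2.0, 5.0), (2.0, 1.0, 4.0)]

# Two different parameter vectors (b0, b1, b2) that both fit the data exactly.
fit_a = (6.0, -1.0, 0.0)
fit_b = (3.0, 0.0, 1.0)

ssr_a = sum_squared_residuals(rows, fit_a)
ssr_b = sum_squared_residuals(rows, fit_b)
```

Both candidates give a perfect fit, so the data alone cannot distinguish between them.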

9449:

7694:

Although the parameters of a regression model are usually estimated using the method of least squares, other methods which have been used include:

to distinguish the estimate from the true (unknown) parameter value that generated the data. Using this estimate, the researcher can then use the

2591:

for prediction or to assess the accuracy of the model in explaining the data. Whether the researcher is intrinsically interested in the estimate

2818:

It is important to note that there must be sufficient data to estimate a regression model. For example, suppose that a researcher has access to

model is a standard method of estimating a joint relationship between several binary dependent variables and some independent variables. For

4015:

5468:

) that minimizes the sum of squared differences between the true data and that line (or hyperplane). For specific mathematical reasons (see

be able to reconstruct any of the independent variables by adding and multiplying the remaining independent variables. As discussed in

1380:

1183:

8748:"The coefficient of determination R-squared is more informative than SMAPE, MAE, MAPE, MSE and RMSE in regression analysis evaluation"

7767:

in different packages. Specialized regression software has been developed for use in fields such as survey analysis and neuroimaging.

(MSE) of the regression. The denominator is the sample size reduced by the number of model parameters estimated from the same data,

{\displaystyle {\hat {\sigma }}_{\beta _{1}}={\hat {\sigma }}_{\varepsilon }{\sqrt {\frac {1}{\sum (x_{i}-{\bar {x}})^{2}}}}}

Regression analysis is primarily used for two conceptually distinct purposes. First, regression analysis is widely used for

A handful of conditions are sufficient for the least-squares estimator to possess desirable properties: in particular, the

{\displaystyle \sum _{i=1}^{n}\sum _{k=1}^{p}x_{ij}x_{ik}{\hat {\beta }}_{k}=\sum _{i=1}^{n}x_{ij}y_{i},\ j=1,\dots ,p.\,}

This is still linear regression; although the expression on the right hand side is quadratic in the independent variable

Fotheringham, AS; Wong, DWS (1 January 1991). "The modifiable areal unit problem in multivariate statistical analysis".

5462:

Under the assumption that the population error term has a constant variance, the estimate of that variance is given by:

{\displaystyle {\widehat {\beta }}_{1}={\frac {\sum (x_{i}-{\bar {x}})(y_{i}-{\bar {y}})}{\sum (x_{i}-{\bar {x}})^{2}}}}
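
The slope formula translates directly into code. The following is a minimal sketch in plain Python, not part of the original text; the function name `simple_ols` and the data are illustrative assumptions. The intercept is computed from the companion formula given elsewhere in the article, the mean of y minus the slope times the mean of x.

```python
def simple_ols(x, y):
    """Return (beta0_hat, beta1_hat) for the line y = beta0 + beta1 * x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Numerator and denominator of the slope formula.
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    if s_xx == 0:
        raise ValueError("x values must not all be identical")
    beta1 = s_xy / s_xx
    beta0 = y_bar - beta1 * x_bar
    return beta0, beta1


# Hypothetical data lying exactly on the line y = 1 + 2x.
x = [0.0, 1.0, 2.0, 3.0]
y = [1.0, 3.0, 5.0, 7.0]
beta0, beta1 = simple_ols(x, y)
```

Because the made-up points lie exactly on a line, the estimates recover its intercept and slope; with noisy data they would give the least-squares compromise instead.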

4080:

standard errors, among other techniques. When rows of data correspond to locations in space, the choice of how to model

Regression methods continue to be an area of active research. In recent decades, new methods have been developed for

to calculate regressions. Before 1970, it sometimes took up to 24 hours to receive the result from one regression.

In practice, researchers first select a model they would like to estimate and then use their chosen method (e.g.,

Distance metric learning, which searches for a meaningful distance metric in a given input space.

, a set of simultaneous linear equations in the parameters, which are solved to yield the parameter estimators,

In multiple linear regression, there are several independent variables or functions of independent variables.

) that most closely fits the data according to a specific mathematical criterion. For example, the method of

must be specified. Sometimes the form of this function is based on knowledge about the relationship between

Correlated errors that exist within subsets of the data or follow specific patterns can be handled using

, then there does not generally exist a set of parameters that will perfectly fit the data. The quantity

{\displaystyle y_{i}=\beta _{0}+\beta _{1}x_{i}+\beta _{2}x_{i}^{2}+\varepsilon _{i},\ i=1,\dots ,n.\!}

{\displaystyle {\hat {Y}}_{i}={\hat {\beta }}_{0}+{\hat {\beta }}_{1}X_{1i}+{\hat {\beta }}_{2}X_{2i}}

that does not rely on the data. If no such knowledge is available, a flexible or convenient form for

). If the researcher decides that five observations are needed to precisely define a straight line (

{\displaystyle y_{i}=\beta _{1}x_{i1}+\beta _{2}x_{i2}+\cdots +\beta _{p}x_{ip}+\varepsilon _{i},\,}

{\displaystyle \varepsilon _{i}=y_{i}-{\hat {\beta }}_{1}x_{i1}-\cdots -{\hat {\beta }}_{p}x_{ip}.}

For these and other reasons, some argue that extrapolation may be unwise.

7020:

6816:

) or estimate the conditional expectation across a broader collection of non-linear models (e.g.,

) to estimate the parameters of that model. Regression models involve the following components:

). For Galton, regression had only this biological meaning, but his work was later extended by

8205:"The goodness of fit of regression formulae, and the distribution of regression coefficients"

Data

Fitting and Uncertainty (A practical introduction to weighted least squares and beyond)

that most closely fits the data. To carry out regression analysis, the form of the function

Principles and

Procedures of Statistics with Special Reference to the Biological Sciences.

7983:, Firmin Didot, Paris, 1805. “Sur la Méthode des moindres quarrés” appears as an appendix.

Meade, Nigel; Islam, Towhidul (1995). "Prediction intervals for growth curve forecasts".

can be invoked such that hypothesis testing may proceed using asymptotic approximations.

are assumed to be free of error. This important assumption is often overlooked, although

8106:(Galton uses the term "regression" in this paper, which discusses the height of humans.)

), then the maximum number of independent variables the model can support is 4, because

6793:

, is the difference between the value of the dependent variable predicted by the model,

{\displaystyle {\widehat {y}}_{i}={\widehat {\beta }}_{0}+{\widehat {\beta }}_{1}x_{i}.}

Alternatively, one can visualize infinitely many 3-dimensional planes that go through

{\displaystyle \mathbf {{\hat {\boldsymbol {\beta }}}=(X^{\top }X)^{-1}X^{\top }Y} .\,}
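
The matrix solution can be sketched without any numerical library. The code below is an illustration under stated assumptions, not part of the original text: the function name `solve_normal_equations` and the data are hypothetical, and the linear system is solved by Gauss-Jordan elimination with partial pivoting rather than by forming the inverse explicitly.

```python
def solve_normal_equations(X, y):
    """Solve (X^T X) beta = X^T y for beta using Gauss-Jordan elimination."""
    n, p = len(X), len(X[0])
    # Augmented matrix [X^T X | X^T y], one row per parameter.
    A = [[sum(X[i][j] * X[i][k] for i in range(n)) for k in range(p)]
         + [sum(X[i][j] * y[i] for i in range(n))]
         for j in range(p)]
    for col in range(p):
        # Partial pivoting: pick the row with the largest entry in this column.
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("X^T X is singular; columns of X are linearly dependent")
        A[col], A[pivot] = A[pivot], A[col]
        # Eliminate this column from every other row.
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[j][p] / A[j][j] for j in range(p)]


# Hypothetical data generated exactly from y = 1 + 2*x1 - 3*x2.
# The first column of ones corresponds to the intercept.
X = [[1.0, 0.0, 0.0],
     [1.0, 1.0, 0.0],
     [1.0, 0.0, 1.0],
     [1.0, 1.0, 1.0],
     [1.0, 2.0, 1.0]]
y = [1.0, 3.0, -2.0, 0.0, 2.0]
beta = solve_normal_equations(X, y)
```

The singularity check corresponds to the requirement that the independent variables be linearly independent. Forming X^T X explicitly is adequate for a small illustration like this one, but it can lose numerical accuracy when the columns of X are nearly collinear.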

{\displaystyle y_{i}=\beta _{0}+\beta _{1}x_{i}+\varepsilon _{i},\quad i=1,\dots ,n.\!}

-th independent variable. If the first independent variable takes the value 1 for all

8830:– how linear regression mistakes can appear when Y-range is much smaller than X-range

Deviations from the model have an expected value of zero, conditional on covariates:

3744:

3621:

in the model. Moreover, to estimate a least squares model, the independent variables

Suppose further that the researcher wants to estimate a bivariate linear model via

{\displaystyle \mathbf {(X^{\top }X){\hat {\boldsymbol {\beta }}}={}X^{\top }Y} ,\,}

{\displaystyle {\widehat {\beta }}_{0}={\bar {y}}-{\widehat {\beta }}_{1}{\bar {x}}}

Geographically weighted regression: the analysis of spatially varying relationships

8094:(Galton uses the term "reversion" in this paper, which discusses the size of peas.)

In the case of simple regression, the formulas for the least squares estimates are

, different forms of regression analysis provide tools to estimate the parameters

2333:

to be a reasonable approximation for the statistical process generating the data.

the range of values in the dataset used for model-fitting is known informally as

7277:

of the estimated parameters. Commonly used checks of goodness of fit include the

7270:

7269:

Once a regression model has been constructed, it may be important to confirm the

6034:

5387:

4014:

can lead to reasonable estimates when independent variables are measured with errors.

3986:

3124:

that explain the data equally well: any combination can be chosen that satisfies

To understand why there are infinitely many options, note that the system of

In linear regression, the model specification is that the dependent variable,

or are variables constrained to fall only in a certain range, often arise in

3516:

{\displaystyle ({\hat {\beta }}_{0},{\hat {\beta }}_{1},{\hat {\beta }}_{2})}

Set of statistical processes for estimating the relationships among variables

to a more general statistical context. In the work of Yule and

Pearson, the

8092:. "Typical laws of heredity", Nature 15 (1877), 492–495, 512–514, 532–533.

7337:

7325:

This method obtains parameter estimates that minimize the sum of squared

Proc. International

Conference on Computer Analysis of Images and Patterns

, requires a large number of observations and is computationally intensive

2040:

can be used when the independent variables are assumed to contain errors.

within geographic units can have important consequences. The subfield of

may be used when the dependent variable is only sometimes observed, and

{\displaystyle Y_{i}=\beta _{0}+\beta _{1}X_{1i}+\beta _{2}X_{2i}+e_{i}}

Francis Galton. Presidential address, Section H, Anthropology. (1885)

7958:

7285:

and hypothesis testing. Statistical significance can be checked by an

{\displaystyle {\hat {\sigma }}_{\varepsilon }^{2}={\frac {SSR}{n-2}}}

Second, in some situations regression analysis can be used to infer

Fotheringham, A. Stewart; Brunsdon, Chris; Charlton, Martin (2002).

and are therefore valid solutions that minimize the sum of squared

A given regression method will ultimately provide an estimate of

2179:

is chosen. For example, a simple univariate regression may propose

8545:"Human age estimation by metric learning for regression problems"

7828:

3482:

equations is to be solved for 3 unknowns, which makes the system

1753:

directly observed in data and are often denoted using the scalar

1063:

12778:

7980:

Nouvelles méthodes pour la détermination des orbites des comètes

7762:

and multiple regression using least squares can be done in some

3617:

appears often in regression analysis, and is referred to as the

, which are observed in data and often denoted using the scalar

Chicco, Davide; Warrens, Matthijs J.; Jurman, Giuseppe (2021).

8665:

Applied

Regression Analysis, Linear Models and Related Methods.

, which is more robust in the presence of outliers, leading to

{\displaystyle {\widehat {\beta }}_{0},{\widehat {\beta }}_{1}}

3038:

data points, then they could find infinitely many combinations

2838:

rows of data with one dependent and two independent variables:

, which are observed in data and are often denoted as a vector

814:

8011:

Theoria combinationis observationum erroribus minimis obnoxiae

Nonlinear models for binary dependent variables include the

, least squares is widely used because the estimated function

Regression

Analysis — Theory, Methods, and Applications

) are useful when researchers want to model other functions

13721:

Committee on the

Environment, Public Health and Food Safety

13527:

10440:

9403:

7995:

Chapter 1 of: Angrist, J. D., & Pischke, J. S. (2008).

1561:

of the response and explanatory variables is assumed to be

8740:

Operations and

Production Systems with Multiple Objectives

4129:

for a derivation of these formulas and a numerical example

{\displaystyle f(X_{i},\beta )=\beta _{0}+\beta _{1}X_{i}}

, where its use has substantial overlap with the field of

9787:

8473:(3rd ed.). Hoboken, New Jersey: Wiley. p. 211.

6061:

In the more general multiple regression model, there are

4074:

clustered standard errors, geographic weighted regression

1421:

in machine learning parlance) and one or more error-free

8729:

Many

Regression Algorithms, One Unified Model: A Review.

6712:

In matrix notation, the normal equations are written as

6375:

The least squares parameter estimates are obtained from

3851:

The sample is representative of the population at large.

2656:

will depend on context and their goals. As described in

1346:

List of datasets in computer vision and image processing

8455:

page 274 section 9.7.4 "interpolation vs extrapolation"

7997:

Mostly Harmless Econometrics: An Empiricist's Companion

{\displaystyle {\frac {\log 1000}{\log 5}}\approx 4.29}
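
The arithmetic of this example can be reproduced in a few lines. The sketch below is an illustration, not part of the original text. It assumes the rule takes the form N = m^n, where N is the sample size, n the number of independent variables, and m the number of observations needed for a model with one independent variable; the function name `max_independent_variables` is hypothetical.

```python
import math


def max_independent_variables(sample_size, obs_per_variable):
    """Largest integer n with obs_per_variable ** n <= sample_size."""
    if sample_size < 1 or obs_per_variable < 2:
        raise ValueError("need sample_size >= 1 and obs_per_variable >= 2")
    n = 0
    # Integer arithmetic avoids floating-point trouble at exact powers.
    while obs_per_variable ** (n + 1) <= sample_size:
        n += 1
    return n


# Values from the example in the text: 1000 patients, five observations per variable.
ratio = math.log(1000) / math.log(5)
n_max = max_independent_variables(1000, 5)
```

Here `ratio` is about 4.29, and rounding down gives the maximum of 4 independent variables stated in the example.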

7404:

Differences between linear and non-linear least squares

3997:

assumptions imply that the parameter estimates will be

{\displaystyle {\hat {Y_{i}}}=f(X_{i},{\hat {\beta }})}

1569:

in his works of 1922 and 1925. Fisher assumed that the

8731:

Neural Networks, vol. 69, Sept. 2015, pp. 60–79.

8245:

{\displaystyle Y_{i}=\beta _{0}+\beta _{1}X_{i}+e_{i}}

8369:

8349:(Reprint ed.). Chichester, England: John Wiley.

8333:

Regressions: Why Are Economists Obsessed with Them?

7707:

Percentage regression, for situations where reducing

The independent variables are measured with no error.

Autoregressive conditional heteroskedasticity (ARCH)

8162:; Yule, G.U.; Blanchard, Norman; Lee, Alice (1903).

7774:

2459:{\displaystyle \sum _{i}(Y_{i}-f(X_{i},\beta ))^{2}}

1587:, regression involving correlated responses such as

8745:

8471:

Common Errors in Statistics (And How to Avoid Them)

11724:

8256:

7677:

7625:

7605:

7584:

7564:

7544:

7524:

7241:

7158:

7132:

7103:

7077:

7057:

7031:

7009:

6973:

6944:

6924:

6897:

6877:

6857:

6827:

6805:

6779:

6701:

6509:

6387:

6360:

6333:

6297:

6277:

6257:

6237:

6204:

6073:

6018:

5806:

5679:

5653:

5627:

5586:

5566:

5527:

5451:

5431:

5402:

5378:

5346:

5260:

5093:

5039:Minimization of this function results in a set of

5028:

4948:

4921:

4885:

4816:

4717:

4697:

4668:

4638:

4611:

4584:

4554:

4415:

4377:

4268:

4241:

4214:

4187:

4151:

4099:

4064:

4037:

3969:

3935:

3906:

3768:

3735:

3693:

3609:

3583:

3557:

3531:

3504:

3474:

3444:

3244:

3116:

3030:

3004:

2895:

2830:

2807:

2757:

2703:

2648:

2612:

2583:

2507:

2478:

2458:

2380:

2352:

2325:

2249:

2171:

2151:

2124:

2097:

2077:

2043:The researchers' goal is to estimate the function

2028:

1998:

1927:

1896:

1869:

1849:

1814:

1772:

1734:

1700:

1680:

1646:

1445:). The most common form of regression analysis is

7930:

7209:

7192:

7183:

6748:

6739:

6723:

6395:normal equations. The residual can be written as

4551:

4374:

1908:that may stand in for un-modeled determinants of

1449:, in which one finds the line (or a more complex

13952:

8251:

7754:All major statistical software packages perform

4929:, and the true value of the dependent variable,

11810:Multivariate adaptive regression splines (MARS)

8709:, Springer-Verlag, Berlin, 2011 (4th printing).

8605:(1987). "Regression and correlation analysis,"

8028:. Kendall/Hunt Publishing Company. p. 59.

5108:Illustration of linear regression on a data set

4195:data points there is one independent variable:

1788:, different terminologies are used in place of

8591:Evan J. Williams, "I. Regression," pp. 523–41.

7959:Criticism and Influence Analysis in Regression

7312:

4886:{\displaystyle e_{i}=y_{i}-{\widehat {y}}_{i}}

1465:), this allows the researcher to estimate the

1341:List of datasets for machine-learning research

12794:

12691:

10365:

9773:

8849:

8542:

8500:Journal of Modern Applied Statistical Methods

7831:(a linear least squares estimation algorithm)

7410:Prediction (interpolation and extrapolation)

7133:{\displaystyle {\hat {\boldsymbol {\beta }}}}

6974:{\displaystyle {\hat {\boldsymbol {\beta }}}}

4016:Heteroscedasticity-consistent standard errors

1374:

441:

8863:

8733:https://doi.org/10.1016/j.neunet.2015.05.005

8636:Journal of Business and Economic Statistics,

5029:{\displaystyle SSR=\sum _{i=1}^{n}e_{i}^{2}}

8644:

7924:

7572:is the number of independent variables and

2336:Once researchers determine their preferred

1999:{\displaystyle Y_{i}=f(X_{i},\beta )+e_{i}}

13756:Centers for Disease Control and Prevention

12801:

12787:

12698:

12684:

10410:

10372:

10358:

9780:

9766:

8856:

8842:

8684:

8597:, "II. Analysis of Variance," pp. 541–554.

8465:

8051:"Kinship and Correlation (reprinted 1989)"

3694:{\displaystyle (X_{1i},X_{2i},\ldots ,X_{ki})}

2257:, suggesting that the researcher believes

1381:

1367:

448:

434:

34:Regression line for 50 random points in a

13716:Centre for Disease Prevention and Control

13706:Center for Disease Control and Prevention

11023:

8773:

8763:

8519:

8308:

8236:

8066:

7360:with more than two values, there are the

7238:

6776:

6698:

6201:

3779:

2388:that minimizes the sum of squared errors

8584:International Encyclopedia of Statistics

8493:

8259:Statistical Methods for Research Workers

8209:Journal of the Royal Statistical Society

8126:Journal of the Royal Statistical Society

7423:

7320:, which are response variables that are

5694:of the parameter estimates are given by

5103:

2704:{\displaystyle f(X_{i},{\hat {\beta }})}

1576:In the 1950s and 1960s, economists used

1521:The earliest form of regression was the

29:

13761:Health departments in the United States

10289:Numerical smoothing and differentiation

8607:New Palgrave: A Dictionary of Economics

8287:

7991:

7989:

7968:

7934:Statistical Models: Theory and Practice

7845:Multivariate adaptive regression spline

7391:

7352:with more than two values there is the

7180:

7121:

6962:

6745:

6044:

5635:if an intercept is used. In this case,

3012:. If the researcher only has access to

2765:. However, alternative variants (e.g.,

14:

13953:

13766:Council on Education for Public Health

12336:Kaplan–Meier estimator (product limit)

8425:Probability, Statistics and Estimation

8421:

8202:

8048:

8023:

3981:with one another. Mathematically, the

1401:is a set of statistical processes for

13824:Professional degrees of public health

13731:Ministry of Health and Family Welfare

12782:

12679:

12409:

11976:

11723:

11022:

10792:

10409:

10353:

9761:

8837:

8822:What is multiple regression used for?

8496:"Least Squares Percentage Regression"

8002:

3747:and therefore that a unique solution

2896:{\displaystyle (Y_{i},X_{1i},X_{2i})}

38:around the line y=1.5x+2 (not shown)

13921:

13814:Bachelor of Science in Public Health

12646:

12346:Accelerated failure time (AFT) model

9824:Iteratively reweighted least squares

9694:Generative adversarial network (GAN)

8828:Regression of Weakly Correlated Data

8116:

7986:

4114:

3783:

2364:(including its most common variant,

2009:Note that the independent variables

1795:Most regression models propose that

1541:The term "regression" was coined by

13933:

13082:Workers' right to access the toilet

12923:Human right to water and sanitation

12658:

11941:Analysis of variance (ANOVA, anova)

10793:

8582:, ed. (1978), "Linear Hypotheses,"

7931:David A. Freedman (27 April 2009).

7758:regression analysis and inference.

7747:For a more comprehensive list, see

7461:this range of the data is known as

7443:variable given known values of the

7010:{\displaystyle {\hat {\beta }}_{j}}

4705:is an error term and the subscript

4423:to the preceding regression gives:

3847:. These assumptions often include:

3539:distinct parameters, one must have

1790:dependent and independent variables

1610:

1336:Glossary of artificial intelligence

24:

12036:Cochran–Mantel–Haenszel statistics

10662:Pearson product-moment correlation

9842:Pearson product-moment correlation

8727:Stulp, Freek, and Olivier Sigaud.

8569:

8026:Second-Semester Applied Statistics

7711:errors is deemed more appropriate.

7495:Power and sample size calculations

7226:

7200:

6764:

6731:

4922:{\displaystyle {\widehat {y}}_{i}}

4725:indexes a particular observation.

1578:electromechanical desk calculators

1565:. This assumption was weakened by

25:

13972:

13355:Commercial determinants of health

12808:

8791:

8618:Alternative Methods of Regression

8404:Steel, R.G.D, and Torrie, J. H.,

7957:R. Dennis Cook; Sanford Weisberg

4592:, it is linear in the parameters

3943:is constant across observations (

13932:

13920:

13909:

13908:

12938:National public health institute

12657:

12645:

12633:

12620:

12619:

12410:

10322:

9732:

9731:

9711:

8672:Applied Nonparametric Regression

8645:Draper, N.R.; Smith, H. (1998).

8335:March 2006. Accessed 2011-12-03.

7850:Multivariate normal distribution

7814:Fraction of variance unexplained

7777:

7689:

7289:of the overall fit, followed by

7231:

7222:

7216:

7213:

7205:

7196:

7189:

7025:

6821:

6769:

6760:

6754:

6736:

6727:

4698:{\displaystyle \varepsilon _{i}}

3907:{\displaystyle E(e_{i}|X_{i})=0}

3788:

415:

13335:Open-source healthcare software

13077:Sociology of health and illness

12751:Associative (causal) forecasts

12295:Least-squares spectral analysis

8536:

8487:

8459:

8443:Statistical methods of analysis

8435:

8415:

8398:

8363:

8338:

8325:

8281:

8196:

8164:"The Law of Ancestral Heredity"

8152:

8110:

8098:

7855:Pearson correlation coefficient

7265:Category:Regression diagnostics

4349:

4167:(but need not be linear in the

3769:{\displaystyle {\hat {\beta }}}

2808:{\displaystyle f(X_{i},\beta )}

2613:{\displaystyle {\hat {\beta }}}

2508:{\displaystyle {\hat {\beta }}}

2078:{\displaystyle f(X_{i},\beta )}

363:Least-squares spectral analysis

301:Generalized estimating equation

121:Multinomial logistic regression

96:Vector generalized linear model

13696:Caribbean Public Health Agency

13508:Sexually transmitted infection

13405:Statistical hypothesis testing

13166:Occupational safety and health

13067:Sexual and reproductive health

12980:Occupational safety and health

11276:Mean-unbiased minimum-variance

10379:

9644:Recurrent neural network (RNN)

9634:Differentiable neural computer

8818:– basic history and references

8632:Calculating Interval Forecasts

8122:"On the Theory of Correlation"

8083:

8042:

8017:

7965:, Vol. 13. (1982), pp. 313–361

7951:

7937:. Cambridge University Press.

7913:

7252:

7124:

6995:

6965:

6609:

6479:

6438:

5965:

5941:

5934:

5912:

5896:

5860:

5831:

5791:

5784:

5762:

5740:

5711:

5622:

5604:

5561:

5549:

5479:

5423:

5370:

5338:

5304:

5246:

5239:

5217:

5209:

5203:

5181:

5178:

5172:

5150:

4956:. One method of estimation is

3916:The variance of the residuals

3895:

3881:

3867:

3760:

3713:, this condition ensures that

3688:

3628:

3515:More generally, to estimate a

3427:

3423:

3398:

3363:

3341:

3331:

3316:

3306:

3276:

3217:

3182:

3160:

3138:

3111:

3099:

3077:

3055:

3045:

2890:

2845:

2802:

2783:

2758:{\displaystyle E(Y_{i}|X_{i})}

2752:

2738:

2724:

2698:

2692:

2670:

2649:{\displaystyle {\hat {Y_{i}}}}

2640:

2604:

2578:

2572:

2550:

2538:

2499:

2447:

2443:

2424:

2405:

2208:

2189:

2072:

2053:

1980:

1961:

756:Relevance vector machine (RVM)

13:

1:

13350:Social determinants of health

12589:Geographic information system

11805:Simultaneous equations models

9689:Variational autoencoder (VAE)

9649:Long short-term memory (LSTM)

8916:Computational learning theory

7999:. Princeton University Press.

7906:

7840:Modifiable areal unit problem

7281:, analyses of the pattern of

6885:element of the column vector

6055:For a numerical example, see

1935:or random statistical noise:

1457:computes the unique line (or

1245:Computational learning theory

809:Expectation–maximization (EM)

182:Nonlinear mixed-effects model

13410:Analysis of variance (ANOVA)

13171:Human factors and ergonomics

12736:Decomposition of time series

11772:Coefficient of determination

11383:Uniformly most powerful test

10312:Regression analysis category

10202:Response surface methodology

9669:Convolutional neural network

8649:(3rd ed.). John Wiley.

7920:Necessary Condition Analysis

7749:List of statistical software

7032:{\displaystyle \mathbf {X} }

6828:{\displaystyle \mathbf {X} }

1483:Necessary Condition Analysis

1405:the relationships between a

1202:Coefficient of determination

1049:Convolutional neural network

761:Support vector machine (SVM)

7:

13591:Good manufacturing practice

13395:Randomized controlled trial

12341:Proportional hazards models

12285:Spectral density estimation

12267:Vector autoregression (VAR)

11701:Maximum posterior estimator

10933:Randomized controlled trial

10184:Frisch–Waugh–Lovell theorem

10154:Mean and predicted response

9664:Multilayer perceptron (MLP)

8804:Encyclopedia of Mathematics

8647:Applied Regression Analysis

7770:

7742:

7318:Limited dependent variables

7313:Limited dependent variables

4669:{\displaystyle \beta _{2}.}

4045:to change across values of

1534:including a version of the

1353:Outline of machine learning

1250:Empirical risk minimization

384:Mean and predicted response

10:

13977:

13661:Theory of planned behavior

13586:Good agricultural practice

13491:Public health surveillance

13383:epidemiological statistics

13027:Public health intervention

12717:Historical data forecasts

12101:Multivariate distributions

10521:Average absolute deviation

9834:Correlation and dependence

9740:Artificial neural networks

9654:Gated recurrent unit (GRU)

8880:Differentiable programming

8372:Environment and Planning A

8310:10.1214/088342305000000331

8024:Mogull, Robert G. (2004).

7746:

7703:Bayesian linear regression

7413:

7395:

7370:Censored regression models

7293:of individual parameters.

7262:

7256:

6361:{\displaystyle \beta _{1}}

6054:

6048:

5432:{\displaystyle {\bar {y}}}

5379:{\displaystyle {\bar {x}}}

4639:{\displaystyle \beta _{1}}

4612:{\displaystyle \beta _{0}}

4269:{\displaystyle \beta _{1}}

4242:{\displaystyle \beta _{0}}

4124:

4118:

3983:variance–covariance matrix

2038:errors-in-variables models

1547:regression toward the mean

1516:

990:Feedforward neural network

741:Artificial neural networks

177:Linear mixed-effects model

13904:

13839:

13798:

13783:World Toilet Organization

13778:World Health Organization

13685:

13674:

13611:

13536:

13452:

13380:

13345:Public health informatics

13285:

13090:

13052:Right to rest and leisure

12881:Globalization and disease

12816:

12749:

12715:

12615:

12569:

12506:

12459:

12422:

12418:

12405:

12377:

12359:

12326:

12317:

12275:

12222:

12183:

12132:

12123:

12089:Structural equation model

12044:

12001:

11997:

11972:

11931:

11897:

11851:

11818:

11780:

11747:

11743:

11719:

11659:

11568:

11487:

11451:

11442:

11425:Score/Lagrange multiplier

11410:

11363:

11308:

11234:

11225:

11035:

11031:

11018:

10977:

10951:

10903:

10858:

10840:Sample size determination

10805:

10801:

10788:

10692:

10647:

10621:

10603:

10559:

10511:

10431:

10422:

10418:

10405:

10387:

10307:

10271:

10220:

10192:

10179:Minimum mean-square error

10146:

10092:

10066:Decomposition of variance

10064:

10029:

9988:

9970:Growth curve (statistics)

9957:

9939:Generalized least squares

9919:

9908:

9875:

9832:

9799:

9707:

9621:

9565:

9494:

9427:

9299:

9199:

9192:

9146:

9110:

9073:Artificial neural network

9053:

8929:

8896:Automatic differentiation

8869:

8816:Earliest Uses: Regression

7734:interval predictor models

7715:Least absolute deviations

7159:{\displaystyle p\times 1}

7104:{\displaystyle n\times 1}

7058:{\displaystyle n\times p}

4416:{\displaystyle x_{i}^{2}}

3565:distinct data points. If

2767:least absolute deviations

1525:, which was published by

973:Artificial neural network

343:Least absolute deviations

13829:Schools of public health

13621:Diffusion of innovations

13320:Health impact assessment

13032:Public health laboratory

12928:Management of depression

12759:Simple linear regression

12584:Environmental statistics

12106:Elliptical distributions

11899:Generalized linear model

11828:Simple linear regression

11598:Hodges–Lehmann estimator

11055:Probability distribution

10964:Stochastic approximation

10526:Coefficient of variation

10037:Generalized linear model

9929:Simple linear regression

9819:Non-linear least squares

9801:Computational statistics

8901:Neuromorphic engineering

8864:Differentiable computing

8742:. John Wiley & Sons.

8609:, v. 4, pp. 120–23.

8469:; Hardin, J. W. (2009).

8422:Rouaud, Mathieu (2013).

8049:Galton, Francis (1989).

7963:Sociological Methodology

7824:Generalized linear model

7760:Simple linear regression

7724:Nonparametric regression

7334:linear probability model

7275:statistical significance

6334:{\displaystyle x_{i1}=1}

4173:simple linear regression

4127:simple linear regression

1597:nonparametric regression

1571:conditional distribution

1487:nonparametric regression

1282:Journals and conferences

1229:Mathematical foundations

1139:Temporal difference (TD)

995:Recurrent neural network

915:Conditional random field

838:Dimensionality reduction

586:Dimensionality reduction

548:Quantum machine learning

543:Neuromorphic engineering

503:Self-supervised learning

498:Semi-supervised learning

91:Generalized linear model

13892:Social hygiene movement

13819:Doctor of Public Health

13651:Social cognitive theory

13453:Infectious and epidemic

13235:Fecal–oral transmission

12244:Cross-correlation (XCF)

11852:Non-standard predictors

11286:Lehmann–Scheffé theorem

10959:Adaptive clinical trial

9674:Residual neural network

9090:Artificial Intelligence

8705:A. Sen, M. Srivastava,

8554:: 74–82. Archived from

8290:"Fisher and Regression"

7900:Linear trend estimation

7525:{\displaystyle N=m^{n}}

6265:-th observation on the

6081:independent variables:

5628:{\displaystyle (n-p-1)}

3558:{\displaystyle N\geq k}

3252:, all of which lead to

2713:conditional expectation

2620:or the predicted value

1708:denotes a row of data).

1523:method of least squares

1467:conditional expectation

691:Apprenticeship learning

13887:Germ theory of disease

13666:Transtheoretical model

12640:Mathematics portal

12461:Engineering statistics

12369:Nelson–Aalen estimator

11946:Analysis of covariance

11833:Ordinary least squares

11757:Pearson product-moment

11161:Statistical functional

11072:Empirical distribution

10905:Controlled experiments

10634:Frequency distribution

10412:Descriptive statistics

10329:Mathematics portal

10253:Orthogonal polynomials

10079:Analysis of covariance

9944:Weighted least squares

9934:Ordinary least squares

9885:Ordinary least squares

8752:PeerJ Computer Science

8738:Malakooti, B. (2013).

8699:10.1002/for.3980140502

8687:Journal of Forecasting

8630:Chatfield, C. (1993) "

8543:YangJing Long (2009).

8288:Aldrich, John (2005).

8182:10.1093/biomet/2.2.211

7819:Function approximation

7679:

7627:

7607:

7586:

7566:

7546:

7526:

7447:variables. Prediction

7430:

7378:polychoric correlation

7259:Regression diagnostics

7243:

7160:

7134:

7105:

7079:

7059:

7033:

7011:

6975:

6946:

6926:

6899:

6879:

6859:

6858:{\displaystyle x_{ij}}

6829:

6807:

6781:

6703:

6644:

6575:

6554:

6511:

6389:

6362:

6335:

6299:

6279:

6259:

6239:

6238:{\displaystyle x_{ij}}

6206:

6075:

6049:For a derivation, see

6020:

5808:

5681:

5661:so the denominator is

5655:

5629:

5588:

5568:

5529:

5453:

5433:

5404:

5380:

5348:

5262:

5109:

5095:

5030:

5010:

4958:ordinary least squares

4950:

4923:

4887:

4818:

4719:

4699:

4670:

4640:

4613:

4586:

4556:

4417:

4379:

4270:

4243:

4222:, and two parameters,

4216:

4189:

4153:

4101:

4066:

4039:

4018:allow the variance of

3971:

3937:

3908:

3780:Underlying assumptions

3770:

3737:

3736:{\displaystyle X^{T}X}

3711:ordinary least squares

3695:

3611:

3585:

3584:{\displaystyle N>k}

3559:

3533:

3506:

3476:

3446:

3246:

3118:

3032:

3006:

2897:

2832:

2809:

2759:

2705:

2658:ordinary least squares

2650:

2614:

2585:

2509:

2480:

2479:{\displaystyle \beta }

2460:

2382:

2381:{\displaystyle \beta }

2366:ordinary least squares

2354:

2353:{\displaystyle \beta }

2327:

2251:

2173:

2153:

2126:

2099:

2079:

2030:

2000:

1929:

1898:

1871:

1870:{\displaystyle \beta }

1851:

1816:

1774:

1736:

1702:

1682:

1648:

1647:{\displaystyle \beta }

1617:ordinary least squares

1455:ordinary least squares

1240:Bias–variance tradeoff

1122:Reinforcement learning

1098:Spiking neural network

508:Reinforcement learning

422:Mathematics portal

348:Iteratively reweighted

39:

18:Statistical regression

13771:Public Health Service

13656:Social norms approach

13646:PRECEDE–PROCEED model

13092:Preventive healthcare

12985:Pharmaceutical policy

12834:Chief Medical Officer

12726:Exponential smoothing

12556:Population statistics

12498:System identification

12232:Autocorrelation (ACF)

12160:Exponential smoothing

12074:Discriminant analysis

12069:Canonical correlation

11933:Partition of variance

11795:Regression validation

11639:(Jonckheere–Terpstra)

11538:Likelihood-ratio test

11227:Frequentist inference

11139:Location–scale family

11060:Sampling distribution

11025:Statistical inference

10992:Cross-sectional study

10979:Observational studies

10938:Randomized experiment

10767:Stem-and-leaf display

10569:Central limit theorem

10294:System identification

10258:Chebyshev polynomials

10243:Numerical integration

10194:Design of experiments

10138:Regression validation

9965:Polynomial regression

9890:Partial least squares

9629:Neural Turing machine

9217:Human image synthesis

8824:– Multiple regression

8799:"Regression analysis"

8494:Tofallis, C. (2009).

8203:Fisher, R.A. (1922).

8068:10.1214/ss/1177012581

7870:Regression validation

7730:Scenario optimization

7680:

7628:

7608:

7587:

7567:

7547:

7527:

7427:

7414:Further information:

7350:categorical variables

7322:categorical variables

7307:central limit theorem

7273:of the model and the

7244:

7161:

7135:

7106:

7080:

7060:

7034:

7012:

6976:

6947:

6927:

6925:{\displaystyle y_{i}}

6900:

6880:

6860:

6830:

6808:

6782:

6704:

6624:

6555:

6534:

6512:

6390:

6363:

6336:

6300:

6280:

6260:

6240:

6207:

6076:

6039:population parameters

6021:

5809:

5682:

5656:

5630:

5589:

5569:

5567:{\displaystyle (n-p)}

5530:

5454:

5434:

5405:

5381:

5349:

5263:

5107:

5096:

5031:

4990:

4951:

4949:{\displaystyle y_{i}}

4924:

4888:

4819:

4720:

4700:

4671:

4641:

4614:

4587:

4585:{\displaystyle x_{i}}

4557:

4418:

4380:

4271:

4244:

4217:

4215:{\displaystyle x_{i}}

4190:

4169:independent variables

4154:

4152:{\displaystyle y_{i}}

4102:

4100:{\displaystyle e_{i}}

4067:

4065:{\displaystyle X_{i}}

4040:

4038:{\displaystyle e_{i}}

3972:

3970:{\displaystyle e_{i}}

3938:

3936:{\displaystyle e_{i}}

3909:

3771:

3738:

3696:

3612:

3586:

3560:

3534:

3507:

3477:

3447:

3247:

3119:

3033:

3007:

2898:

2833:

2810:

2760:

2706:

2651:

2615:

2586:

2510:

2481:

2461:

2383:

2368:) finds the value of

2355:

2328:

2252:

2174:

2154:

2152:{\displaystyle X_{i}}

2127:

2125:{\displaystyle Y_{i}}

2100:

2080:

2031:

2029:{\displaystyle X_{i}}

2001:

1930:

1928:{\displaystyle Y_{i}}

1899:

1897:{\displaystyle e_{i}}

1872:

1852:

1850:{\displaystyle X_{i}}

1817:

1815:{\displaystyle Y_{i}}

1786:fields of application

1775:

1773:{\displaystyle e_{i}}

1737:

1735:{\displaystyle Y_{i}}

1703:

1683:

1681:{\displaystyle X_{i}}

1659:independent variables

1649:

1627:, often denoted as a

1439:explanatory variables

1423:independent variables

1076:Neural radiance field

898:Structured prediction

621:Structured prediction

493:Unsupervised learning

379:Regression validation

358:Bayesian multivariate

75:Polynomial regression

36:Gaussian distribution

33:

13847:Sara Josephine Baker

13746:Public Health Agency

13631:Health communication

13496:Disease surveillance

13462:Asymptomatic carrier

13444:Statistical software

13132:Preventive nutrition

12960:Medical anthropology

12849:Environmental health

12479:Probabilistic design

12064:Principal components

11907:Exponential families

11859:Nonlinear regression

11838:General linear model

11800:Mixed effects models

11790:Errors and residuals

11767:Confounding variable

11669:Bayesian probability

11647:Van der Waerden test

11637:Ordered alternative

11402:Multiple comparisons

11281:Rao–Blackwellization

11244:Estimating equations

11200:Statistical distance

10918:Factorial experiment

10451:Arithmetic-Geometric

10299:Moving least squares

10238:Approximation theory

10174:Studentized residual

10164:Errors and residuals

10159:Gauss–Markov theorem

10074:Analysis of variance

9996:Nonlinear regression

9975:Segmented regression

9949:General linear model

9867:Confounding variable

9814:Linear least squares

9720:Computer programming

9699:Graph neural network

9274:Text-to-video models

9252:Text-to-image models

9100:Large language model

9085:Scientific computing

8891:Statistical manifold

8886:Information geometry

8765:10.7717/peerj-cs.623

8586:. Free Press, v. 1,

8512:10.2139/ssrn.1406472

8445:, World Scientific.

8441:Chiang, C.L, (2003)

8263:(Twelfth ed.).

7880:Segmented regression

7640:

7617:

7597:

7576:

7556:

7552:is the sample size,

7536:

7503:

7398:Nonlinear regression

7392:Nonlinear regression

7173:

7144:

7115:

7089:

7069:

7043:

7021:

6985:

6956:

6936:

6909:

6889:

6869:

6839:

6817:

6794:

6719:

6531:

6402:

6379:

6370:regression intercept

6345:

6309:

6289:

6269:

6249:

6219:

6088:

6065:

6051:linear least squares

6045:General linear model

6031:confidence intervals

5821:

5701:

5665:

5639:

5601:

5578:

5546:

5469:

5443:

5414:

5394:

5361:

5273:

5119:

5047:

4975:

4933:

4897:

4835:

4735:

4709:

4682:

4650:

4623:

4596:

4569:

4431:

4395:

4284:

4253:

4226:

4199:

4179:

4136:

4084:

4049:

4022:

3954:

3920:

3861:

3751:

3717:

3703:linearly independent

3625:

3595:

3569:

3543:

3523:

3490:

3460:

3256:

3128:

3042:

3016:

2911:

2842:

2822:

2777:

2718:

2664:

2624:

2595:

2522:

2490:

2470:

2392:

2372:

2344:

2261:

2183:

2163:

2136:

2109:

2089:

2047:

2013:

1942:

1912:

1881:

1861:

1834:

1799:

1757:

1719:

1692:

1665:

1638:

1536:Gauss–Markov theorem

1506:causal relationships

1395:statistical modeling

1265:Statistical learning

1163:Learning with humans

955:Local outlier factor

404:Gauss–Markov theorem

399:Studentized residual

389:Errors and residuals

223:Principal components

193:Nonlinear regression

80:General linear model

13961:Regression analysis

13857:Carl Rogers Darnall

13852:Samuel Jay Crumbine

13626:Health belief model

13479:Notifiable diseases

13415:Regression analysis

13250:Waterborne diseases

12839:Cultural competence

12764:Regression analysis

12551:Official statistics

12474:Methods engineering

12155:Seasonal adjustment

11923:Poisson regressions

11843:Bayesian regression

11782:Regression analysis

11762:Partial correlation

11734:Regression analysis

11333:Prediction interval

11328:Likelihood interval

11318:Confidence interval

11310:Interval estimation

11271:Unbiased estimators

11089:Model specification

10969:Up-and-down designs

10657:Partial correlation

10613:Index of dispersion

10531:Interquartile range

10317:Statistics category

10248:Gaussian quadrature

10133:Model specification

10100:Stepwise regression

9958:Predictor structure

9895:Total least squares

9877:Regression analysis

9862:Partial correlation

9793:regression analysis

9066:In-context learning

8906:Pattern recognition

8641:. pp. 121–135.

8297:Statistical Science

8267:: Oliver and Boyd.

8055:Statistical Science

7890:Stepwise regression

7865:Prediction interval

7719:quantile regression

7473:prediction interval

7420:Prediction interval

7388:model may be used.

7346:multivariate probit

6005:

5680:{\displaystyle n-2}

5654:{\displaystyle p=1}

5538:This is called the

5495:

5439:is the mean of the

5025:

4507:

4412:

4171:). For example, in

4012:errors-in-variables

3610:{\displaystyle N-k}

3505:{\displaystyle N=2}

3475:{\displaystyle N=2}

3292:

3031:{\displaystyle N=2}

2771:quantile regression

1906:additive error term

1828:regression function

1479:quantile regression

1475:location parameters

1399:regression analysis

1108:Electrochemical RAM

1015:reservoir computing

746:Logistic regression

665:Supervised learning

651:Multimodal learning

626:Feature engineering

571:Generative modeling

533:Rule-based learning

528:Curriculum learning

488:Supervised learning

463:Part of a series on

249:Errors-in-variables

116:Logistic regression

106:Binomial regression

51:Regression analysis

45:Part of a series on

13455:disease prevention

13390:Case–control study

13062:Security of person

12911:Health care reform

12571:Spatial statistics

12451:Medical statistics

12351:First hitting time

12305:Whittle likelihood

11956:Degrees of freedom

11951:Multivariate ANOVA

11884:Heteroscedasticity

11696:Bayesian estimator

11661:Bayesian inference

11510:Kolmogorov–Smirnov

11395:Randomization test

11365:Testing hypotheses

11338:Tolerance interval

11249:Maximum likelihood

11144:Exponential family

11077:Density estimation