398:" to mutate and preferentially replicate high-scoring AI systems, similar to how animals evolved to innately desire certain goals such as finding food. Some AI systems, such as nearest-neighbor, instead reason by analogy; these systems are not generally given goals, except to the degree that goals are implicit in their training data. Such systems can still be benchmarked if the non-goal system is framed as a system whose "goal" is to accomplish its narrow classification task.

375:, 0 otherwise") or complex ("Perform actions mathematically similar to ones that succeeded in the past"). The "goal function" encapsulates all of the goals the agent is driven to act on; in the case of rational agents, the function also encapsulates the acceptable trade-offs between accomplishing conflicting goals. (Terminology varies; for example, some agents seek to maximize or minimize a "

89:

724:

Intelligent agents can be organized hierarchically into multiple "sub-agents". Intelligent sub-agents process and perform lower-level functions. Taken together, the intelligent agent and sub-agents create a complete system that can accomplish difficult tasks or goals with behaviors and responses that

644:

A rational utility-based agent chooses the action that maximizes the expected utility of the action outcomes, that is, what the agent expects to derive, on average, given the probabilities and utilities of each outcome. A utility-based agent has to model and keep track of its environment, tasks that

426:

can generate intelligent agents that appear to act in ways intended to maximize a "reward function". Sometimes, rather than setting the reward function to be directly equal to the desired benchmark evaluation function, machine learning programmers will use reward shaping to initially give the machine

401:

Systems that are not traditionally considered agents, such as knowledge-representation systems, are sometimes subsumed into the paradigm by framing them as agents that have a goal of (for example) answering questions as accurately as possible; the concept of an "action" is here extended to encompass

728:

Generally, an agent can be constructed by separating the body into sensors and actuators, so that it operates with a complex perception system that takes the description of the world as input for a controller and outputs commands to the actuators. However, a hierarchy of controller layers is

661:

Learning has the advantage of allowing agents to initially operate in unknown environments and become more competent than their initial knowledge alone might allow. The most important distinction is between the "learning element", responsible for making improvements, and the "performance element",

338:

More importantly, it has a number of practical advantages that have helped move AI research forward. It provides a reliable and scientific way to test programs; researchers can directly compare or even combine different approaches to isolated problems, by asking which agent is best at maximizing a

435:

chess had a simple objective function; each win counted as +1 point, and each loss counted as -1 point. An objective function for a self-driving car would have to be more complicated. Evolutionary computing can evolve intelligent agents that appear to act in ways intended to maximize a "fitness

583:

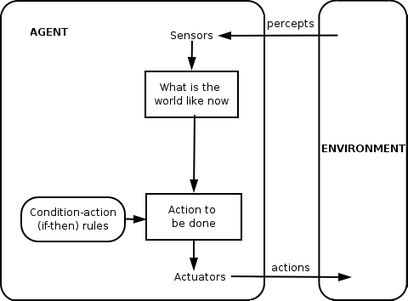

A model-based agent can handle partially observable environments. Its current state is stored inside the agent, which maintains some kind of structure that describes the part of the world that cannot be seen. This knowledge about "how the world works" is called a model of the world, hence the name

611:

Goal-based agents further expand on the capabilities of the model-based agents, by using "goal" information. Goal information describes situations that are desirable. This provides the agent a way to choose among multiple possibilities, selecting the one which reaches a goal state. Search and

867:. It simulates traffic interactions between human drivers, pedestrians and automated vehicles. People's behavior is imitated by artificial agents based on data of real human behavior. The basic idea of using agent-based modeling to understand self-driving cars was discussed as early as 2003.

236:

and

Haenlein define artificial intelligence as "a system's ability to correctly interpret external data, to learn from such data, and to use those learnings to achieve specific goals and tasks through flexible adaptation". This definition is closely related to that of an intelligent agent.

665:

The learning element uses feedback from the "critic" on how the agent is doing and determines how the performance element, or "actor", should be modified to do better in the future. The performance element, previously considered the entire agent, takes in percepts and decides on actions.

591:

that depends on the percept history and thereby reflects at least some of the unobserved aspects of the current state. The percept history and the impact of actions on the environment can be determined by using the internal model. The agent then chooses an action in the same way as a reflex agent.
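As a rough illustration of the internal-model idea, the sketch below assumes a hypothetical two-cell vacuum world (cells "A" and "B") in which the agent perceives only its own location and whether that cell is dirty. The class name `ModelBasedVacuum`, the action names, and the world itself are illustrative assumptions, not part of the source text.

```python
from typing import Dict, Optional, Tuple

Percept = Tuple[str, bool]  # (location, is_dirty); hypothetical percept format


class ModelBasedVacuum:
    """Reflex agent that keeps an internal model of a two-cell world."""

    def __init__(self) -> None:
        # Internal state: last known status of each cell (None = never observed).
        self.model: Dict[str, Optional[bool]] = {"A": None, "B": None}

    def act(self, percept: Percept) -> str:
        location, is_dirty = percept
        # Fold the new percept into the model of the world.
        self.model[location] = is_dirty
        if is_dirty:
            # Model the effect of our own action: the cell will become clean.
            self.model[location] = False
            return "suck"
        other = "B" if location == "A" else "A"
        if self.model[other] is False:
            # The model says the unobserved cell is already clean.
            return "no_op"
        return "right" if location == "A" else "left"


agent = ModelBasedVacuum()
trace = [agent.act(("A", True)), agent.act(("A", False)), agent.act(("B", False))]
print(trace)
```

The final `no_op` is only possible because the agent remembers that cell "A" was cleaned earlier; a simple reflex agent looking at the last percept alone could not know that.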

217:

Padgham & Winikoff (2005) agree that an intelligent agent is situated in an environment and responds in a timely (though not necessarily real-time) manner to changes in the environment. However, intelligent agents must also proactively pursue goals in a flexible and

96:

Leading AI textbooks define "artificial intelligence" as the "study and design of intelligent agents", a definition that considers goal-directed behavior to be the essence of intelligence. Goal-directed agents are also described using a term borrowed from

519:

We use the term percept to refer to the agent's perceptual inputs at any given instant. In the following figures, an agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators.

406:

of the 2010s, an "encoder"/"generator" component attempts to mimic and improvise human text composition. The generator is attempting to maximize a function encapsulating how well it can fool an antagonistic "predictor"/"discriminator" component.

858:

Hallerbach et al. discussed the application of agent-based approaches for the development and validation of automated driving systems via a digital twin of the vehicle-under-test and microscopic traffic simulation based on independent agents.

402:

the "act" of giving an answer to a question. As an additional extension, mimicry-driven systems can be framed as agents that are optimizing a "goal function" based on how closely the IA succeeds in mimicking the desired behavior. In the

563:

This agent function only succeeds when the environment is fully observable. Some reflex agents can also contain information on their current state which allows them to disregard conditions whose actuators are already triggered.
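A condition-action rule table can be sketched in a few lines. The example below assumes a hypothetical thermostat whose only percept is the current room temperature; the thresholds, action names, and the helper `make_reflex_agent` are illustrative assumptions.

```python
from typing import Callable, List, Tuple

# A condition-action rule pairs a test on the current percept with an action.
Rule = Tuple[Callable[[float], bool], str]


def make_reflex_agent(rules: List[Rule], default: str) -> Callable[[float], str]:
    """Return an agent program that looks only at the current percept."""

    def agent(percept: float) -> str:
        # Fire the first rule whose condition matches the percept.
        for condition, action in rules:
            if condition(percept):
                return action
        return default

    return agent


# Hypothetical thermostat: the percept is the room temperature in Celsius.
thermostat = make_reflex_agent(
    rules=[
        (lambda t: t < 19.0, "heat_on"),
        (lambda t: t > 23.0, "heat_off"),
    ],
    default="no_op",
)

print(thermostat(17.5), thermostat(21.0), thermostat(25.0))
```

Because the agent keeps no history, it behaves sensibly only when the current percept carries everything needed to pick an action, which is the full-observability condition described above.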

370:

An agent that is assigned an explicit "goal function" is considered more intelligent if it consistently takes actions that successfully maximize its programmed goal function. The goal can be simple ("1 if the IA wins a game of

447:. In the real world, an IA is constrained by finite time and hardware resources, and scientists compete to produce algorithms that can achieve progressively higher scores on benchmark tests with existing hardware.

459:

f (called the "agent function") which maps every possible percept sequence to a possible action the agent can perform or to a coefficient, feedback element, function or constant that affects eventual actions:

1356:

Adams, Sam; Arel, Itmar; Bach, Joscha; Coop, Robert; Furlan, Rod; Goertzel, Ben; Hall, J. Storrs; Samsonovich, Alexei; Scheutz, Matthias; Schlesinger, Matthew; Shapiro, Stuart C.; Sowa, John (15 March 2012).

335:"). It also doesn't attempt to draw a sharp dividing line between behaviors that are "intelligent" and behaviors that are "unintelligent"—programs need only be measured in terms of their objective function.

632:

Goal-based agents only distinguish between goal states and non-goal states. It is also possible to define a measure of how desirable a particular state is. This measure can be obtained through the use of a

567:

Infinite loops are often unavoidable for simple reflex agents operating in partially observable environments. If the agent can randomize its actions, it may be possible to escape from infinite loops.

693:

Layered architectures – in which decision-making is realized via various software layers, each of which is more or less explicitly reasoning about the environment at different levels of abstraction

1177:

Kaplan, Andreas; Haenlein, Michael (1 January 2019). "Siri, Siri, in my hand: Who's the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence".

690:

Belief-desire-intention agents – in which decision making depends upon the manipulation of data structures representing the beliefs, desires, and intentions of the agent; and finally,

500:

1308:

Andrew Y. Ng, Daishi Harada, and Stuart

Russell. "Policy invariance under reward transformations: Theory and application to reward shaping." In ICML, vol. 99, pp. 278-287. 1999.

108:

An agent has an "objective function" that encapsulates all the IA's goals. Such an agent is designed to create and execute whatever plan will, upon completion, maximize the

160:

Intelligent agents are often described schematically as an abstract functional system similar to a computer program. Abstract descriptions of intelligent agents are called

1507:

Stefano

Albrecht and Peter Stone (2018). Autonomous Agents Modelling Other Agents: A Comprehensive Survey and Open Problems. Artificial Intelligence, Vol. 258, pp. 66-95.

1606:"1.3 Agents Situated in Environments‣ Chapter 2 Agent Architectures and Hierarchical Control‣ Artificial Intelligence: Foundations of Computational Agents, 2nd Edition"

669:

The last component of the learning agent is the "problem generator". It is responsible for suggesting actions that will lead to new and informative experiences.
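The four components of a learning agent (performance element, critic, learning element, problem generator) can be laid out as a small sketch. Everything below is an assumption made for illustration: the class name `LearningAgent`, the running-average update, the fixed exploration schedule `explore_every`, and the toy critic that returns a fixed score per action.

```python
from typing import Callable, Dict, List


class LearningAgent:
    """Toy learning agent; each method plays one of the four roles."""

    def __init__(self, actions: List[str], explore_every: int = 2) -> None:
        self.actions = actions
        self.explore_every = explore_every
        self.estimates: Dict[str, float] = {a: 0.0 for a in actions}
        self.counts: Dict[str, int] = {a: 0 for a in actions}
        self.steps = 0

    def performance_element(self) -> str:
        # Select the action currently believed to be best.
        return max(self.actions, key=lambda a: self.estimates[a])

    def problem_generator(self) -> str:
        # Suggest an exploratory action by cycling through all actions.
        index = (self.steps // self.explore_every) % len(self.actions)
        return self.actions[index]

    def learning_element(self, action: str, feedback: float) -> None:
        # Update the running average of the critic's feedback for this action.
        self.counts[action] += 1
        n = self.counts[action]
        self.estimates[action] += (feedback - self.estimates[action]) / n

    def step(self, critic: Callable[[str], float]) -> str:
        self.steps += 1
        if self.steps % self.explore_every == 0:
            action = self.problem_generator()
        else:
            action = self.performance_element()
        self.learning_element(action, critic(action))
        return action


# Hypothetical critic: a fixed score per action.
scores = {"a": 0.2, "b": 1.0, "c": 0.5}
agent = LearningAgent(["a", "b", "c"])
chosen = [agent.step(lambda action: scores[action]) for _ in range(6)]
print(chosen, agent.performance_element())
```

Without the problem generator the agent in this sketch would keep choosing its first action forever, since it would never gather evidence about the alternatives.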

1631:

1709:

641:

of different world states according to how well they satisfied the agent's goals. The term utility can be used to describe how "happy" the agent is.
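A minimal sketch of expected-utility maximization follows. It assumes the agent already has a model giving, for each action, a list of (probability, utility) outcomes; the action names and numbers are invented for illustration.

```python
from typing import Dict, List, Tuple

Outcome = Tuple[float, float]  # (probability, utility)


def expected_utility(outcomes: List[Outcome]) -> float:
    """Probability-weighted average utility of one action's outcomes."""
    return sum(p * u for p, u in outcomes)


def choose_action(model: Dict[str, List[Outcome]]) -> str:
    """Pick the action whose expected utility is highest."""
    return max(model, key=lambda action: expected_utility(model[action]))


# Hypothetical outcome model for two actions.
model = {
    "safe_route": [(1.0, 5.0)],
    "fast_route": [(0.8, 10.0), (0.2, -20.0)],
}

print(choose_action(model))
```

Here the fast route has the better best case, but its expected utility (0.8 × 10 + 0.2 × (−20) = 4) is lower than the safe route's 5, so a rational utility-based agent under this model prefers the safe route.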

741:". Some 20th-century definitions characterize an agent as a program that aids a user or that acts on behalf of a user. These examples are known as

1330:

1247:

Lindenbaum, M., Markovitch, S., & Rusakov, D. (2004). Selective sampling for nearest neighbor classifiers. Machine learning, 54(2), 125–152.

227:

431:

stated in 2018, "Most of the learning algorithms that people have come up with essentially consist of minimizing some objective function."

315:, it does not refer to human intelligence in any way. Thus, there is no need to discuss if it is "real" vs "simulated" intelligence (i.e.,

268:

882:

745:, and sometimes an "intelligent software agent" (that is, a software agent with intelligence) is referred to as an "intelligent agent".

1284:

1257:

1077:

189:

829:

729:

often necessary to balance the immediate reaction desired for low-level tasks and the slow reasoning about complex, high-level goals.

1870:

1853:

394:" that encourages some types of behavior and punishes others. Alternatively, an evolutionary system can induce goals by using a "

1167:

Lin

Padgham and Michael Winikoff. Developing intelligent agent systems: A practical guide. Vol. 13. John Wiley & Sons, 2005.

1655:

Burgin, Mark, and

Gordana Dodig-Crnkovic. "A systematic approach to artificial agents." arXiv preprint arXiv:0902.3513 (2009).

197:"Anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators"

1896:

1439:

1391:

205:"An agent that acts so as to maximize the expected value of a performance measure based on past experience and knowledge."

128:

505:

The agent function is an abstract concept, as it could incorporate various principles of decision making like calculation of

1814:

1842:

1770:

638:

613:

553:

298:

280:

1927:

924:

687:

Reactive agents – in which decision making is implemented in some form of direct mapping from situation to action.

938:

616:

are the subfields of artificial intelligence devoted to finding action sequences that achieve the agent's goals.
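To make "finding action sequences" concrete, here is a small breadth-first search planner. It is only one of many possible search strategies, and the toy problem (reach the number 5 from 0 using "+1" and "+3" moves) along with the names `plan` and `successors` are assumptions for illustration.

```python
from collections import deque
from typing import Callable, Hashable, Iterable, List, Optional, Tuple

State = Hashable


def plan(
    start: State,
    is_goal: Callable[[State], bool],
    successors: Callable[[State], Iterable[Tuple[str, State]]],
) -> Optional[List[str]]:
    """Breadth-first search for a shortest action sequence to a goal state."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if is_goal(state):
            return actions
        for action, next_state in successors(state):
            if next_state not in visited:
                visited.add(next_state)
                frontier.append((next_state, actions + [action]))
    return None  # no action sequence reaches a goal state


def successors(n: int) -> List[Tuple[str, int]]:
    # Hypothetical toy world: states are integers, bounded above by 10.
    moves = [("+1", n + 1), ("+3", n + 3)]
    return [(action, state) for action, state in moves if state <= 10]


print(plan(0, lambda n: n == 5, successors))
```

The agent only needs a goal test and a successor model; the search itself supplies the sequence of actions.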

403:

1521:

1058:

637:

which maps a state to a measure of the utility of the state. A more general performance measure should allow a

261:

1734:

Connors, J.; Graham, S.; Mailloux, L. (2018). "Cyber

Synthetic Modeling for Vehicle-to-Vehicle Applications".

466:

172:

is designed to function in the absence of human intervention. Intelligent agents are also closely related to

1014:

The

Padgham & Winikoff definition explicitly covers only social agents that interact with other agents.

839:

801:

311:

Philosophically, this definition of artificial intelligence avoids several lines of criticism. Unlike the

1320:. Architects of Intelligence: The truth about AI from the people building it. Packt Publishing Ltd, 2018.

595:

An agent may also use models to describe and predict the behaviors of other agents in the environment.

684:

Logic-based agents – in which the decision about what action to perform is made via logical deduction.

339:

given "goal function". It also gives them a common language to communicate with other fields—such as

943:

415:

340:

116:

agent has a "reward function" that allows the programmers to shape the IA's desired behavior, and an

58:

958:

953:

276:

27:

973:

892:

645:

have involved a great deal of research on perception, representation, reasoning, and learning.

536:

group agents into five classes based on their degree of perceived intelligence and capability:

456:

423:

419:

387:

316:

117:

113:

1679:"Simulation-Based Identification of Critical Scenarios for Cooperative and Automated Vehicles"

967:

1803:

The Master

Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our World

1755:

Proceedings of the 2003 IEEE International

Conference on Intelligent Transportation Systems

1558:

1317:

877:

272:

150:

20:

8:

948:

835:

768:

1562:

1875:

1776:

1194:

1122:

963:

921:– the ability for agents to search heterogeneous data sources using a single vocabulary

719:

46:

1912:

1892:

1879:

1838:

1824:

1810:

1780:

1766:

1678:

1586:

1478:

1473:

1456:

1435:

1198:

984:

738:

332:

154:

144:

132:

124:

414:

systems often accept an explicit goal function, the paradigm can also be applied to

1865:

1758:

1690:

1605:

1576:

1571:

1566:

1546:

1468:

1370:

1186:

1126:

1114:

918:

887:

864:

863:

has created a multi-agent simulation environment, Carcraft, to test algorithms for

794:

788:

764:

737:"Intelligent agent" is also often used as a vague term, sometimes synonymous with "

395:

376:

360:

140:

54:

1190:

897:

757:

443:

was proposed as a maximally intelligent agent in this paradigm. However, AIXI is

391:

147:

88:

1798:

1750:

1508:

989:

913:

781:

749:

742:

603:

556:, ignoring the rest of the percept history. The agent function is based on the

436:

function" that influences how many descendants each agent is allowed to leave.
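The role of a fitness function in deciding which candidates leave descendants can be sketched with a very small evolutionary loop. The selection scheme (keep the better half, add one mutated child per survivor), the Gaussian mutation, and the one-dimensional fitness function are all illustrative assumptions rather than a description of any particular system.

```python
import random
from typing import Callable, List, Tuple


def evolve(
    fitness: Callable[[float], float],
    population: List[float],
    generations: int,
    rng: random.Random,
    sigma: float = 0.5,
) -> Tuple[float, List[float]]:
    """Return the best individual and the best fitness seen per generation."""
    history: List[float] = []
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        history.append(fitness(ranked[0]))
        # Only the higher-scoring half is allowed to leave descendants.
        survivors = ranked[: len(ranked) // 2]
        children = [parent + rng.gauss(0.0, sigma) for parent in survivors]
        population = survivors + children
    return max(population, key=fitness), history


def fitness(x: float) -> float:
    # Hypothetical fitness: highest at x = 3.
    return -((x - 3.0) ** 2)


best, history = evolve(fitness, [-4.0, -2.0, 0.0, 8.0], 30, random.Random(0))
print(best, history[0], history[-1])
```

Because survivors are carried over unchanged, the best fitness in this sketch can never decrease from one generation to the next; how quickly it improves depends on the mutation size and the random seed.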

348:

328:

233:

223:

173:

109:

102:

78:

66:

31:

1762:

1133:

319:

vs "artificial" intelligence) and does not indicate that such a machine has a

1921:

1482:

1375:

1358:

380:

364:

324:

1429:

653:

624:

1828:

1590:

994:

978:

774:

707:

588:

575:

444:

1837:(2nd ed.). Upper Saddle River, New Jersey: Prentice Hall. Chapter 2.

1118:

1806:

1694:

1331:"Why AlphaZero's Artificial Intelligence Has Trouble With the Real World"

933:

929:

903:

510:

411:

386:

Goals can be explicitly defined or induced. If the AI is programmed for "

312:

70:

1871:

10.1002/(SICI)1098-111X(199806)13:6<453::AID-INT1>3.0.CO;2-K

1581:

1258:"Generative adversarial networks: What GANs are and how they've evolved"

908:

428:

177:

62:

50:

1632:"Competing For The Future With Intelligent Agents... And A Confession"

1359:"Mapping the Landscape of Human-Level Artificial General Intelligence"

544:

516:

The agent program, by contrast, maps every possible percept to an action.
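The distinction can be illustrated with a table-driven sketch: the agent function is written out as an explicit (and here deliberately tiny) table over percept sequences, while the agent program receives one percept at a time and keeps the history itself. The table entries, percept names, and the class `TableDrivenAgent` are assumptions for illustration.

```python
from typing import Dict, List, Tuple

PerceptSequence = Tuple[str, ...]

# Agent function f : P* -> A, written out as an explicit partial table.
AGENT_FUNCTION: Dict[PerceptSequence, str] = {
    ("clean",): "move",
    ("dirty",): "suck",
    ("dirty", "clean"): "move",
    ("clean", "clean"): "stop",
}


class TableDrivenAgent:
    """Agent program that realizes the table by remembering past percepts."""

    def __init__(self, table: Dict[PerceptSequence, str], default: str = "no_op") -> None:
        self.table = table
        self.default = default
        self.history: List[str] = []

    def __call__(self, percept: str) -> str:
        # The program sees one percept; the function is indexed by the whole sequence.
        self.history.append(percept)
        return self.table.get(tuple(self.history), self.default)


agent = TableDrivenAgent(AGENT_FUNCTION)
print([agent("dirty"), agent("clean"), agent("clean")])
```

A full table over all percept sequences grows without bound, which is one reason practical agent programs compute actions rather than look them up.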

981:– making data on the Web available for automated processing by agents

432:

372:

344:

123:

Intelligent agents in artificial intelligence are closely related to

98:


209:

It also defines the field of "artificial intelligence research" as:

1033:

1031:

702:

In 2013, Alexander

Wissner-Gross published a theory pertaining to

1057:

Bringsjord, Selmer; Govindarajulu, Naveen Sundar (12 July 2018).

703:

506:

168:) to distinguish them from their real-world implementations. An

131:, and versions of the intelligent agent paradigm are studied in

53:

in order to achieve goals, and may improve its performance with

1832:

1285:"Artificial Intelligence Will Do What We Ask. That's a Problem"

1105:

Bull, Larry (1999). "On model-based evolutionary computation".

1078:"Artificial Intelligence Will Do What We Ask. That's a Problem"

1028:

804:

to represent short- and long-term memory, age, forgetting, etc.

219:

1683:

SAE International Journal of Connected and Automated Vehicles

1229:

1217:

1063:

The Stanford Encyclopedia of Philosophy (Summer 2020 Edition)

860:

183:

180:

computer program that carries out tasks on behalf of users).

136:

82:

1710:"Inside Waymo's Secret World for Training Self-Driving Cars"

780:

Learn and improve through interaction with the environment (

1205:

752:, IA systems should exhibit the following characteristics:

440:

320:

74:

1749:

Yang, Guoqing; Wu, Zhaohui; Li, Xiumei; Chen, Wei (2003).

1676:

552:

Simple reflex agents act only on the basis of the current

455:

A simple agent program can be defined mathematically as a

69:

is considered an example of an intelligent agent, as is a

1736:

In International Conference on Cyber Warfare and Security

1677:

Hallerbach, S.; Xia, Y.; Eberle, U.; Koester, F. (2018).

587:

A model-based reflex agent should maintain some sort of

73:, as is any system that meets the definition, such as a

1056:

1392:"Eye-catching advances in some AI fields are not real"

528:

1751:"SVE: embedded agent based smart vehicle environment"

1738:. Academic Conferences International Limited: 594-XI.

1733:

469:

1854:"Introduction: Hybrid intelligent adaptive systems"

1658:

732:

509:of individual options, deduction over logic rules,

222:way. Optional desiderata include that the agent be

61:. An intelligent agent may be simple or complex: A

1544:

1100:

1098:

494:

1545:Wissner-Gross, A. D.; Freer, C. E. (2013-04-19).

1355:

1919:

523:

120:'s behavior is shaped by a "fitness function".

37:In intelligence and artificial intelligence, an

1095:

1434:. Repin: Bruckner Publishing. pp. 42–59.

1176:

427:rewards for incremental progress in learning.
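One well-known way to give such incremental rewards is potential-based shaping, the form analyzed in the Ng, Harada and Russell paper cited in this article, where the bonus is γ·Φ(s′) − Φ(s) for a potential function Φ. The sketch below assumes a hypothetical one-dimensional corridor with a goal at position 10; the potential function and the names `shaped_reward` and `potential` are illustrative assumptions.

```python
from typing import Callable

GOAL = 10  # hypothetical goal position in a 1-D corridor


def potential(state: int) -> float:
    # Higher (less negative) potential the closer the state is to the goal.
    return -float(abs(GOAL - state))


def shaped_reward(
    reward: float,
    state: int,
    next_state: int,
    phi: Callable[[int], float],
    gamma: float = 0.99,
) -> float:
    """Environment reward plus the shaping term gamma * phi(s') - phi(s)."""
    return reward + gamma * phi(next_state) - phi(state)


toward = shaped_reward(0.0, 3, 4, potential, gamma=1.0)
away = shaped_reward(0.0, 4, 3, potential, gamma=1.0)
print(toward, away)
```

With this potential, a step toward the goal earns a small bonus and a step away earns an equal penalty, even though the underlying environment reward is zero in both cases.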

1823:

1495:

1415:

1211:

1156:

1139:

1069:

1037:

832:to certain ideas, incidents, or controversies

533:

1858:International Journal of Intelligent Systems

1748:

1509:https://doi.org/10.1016/j.artint.2018.01.002

1457:"Artificial intelligence: A modern approach"

662:responsible for selecting external actions.

570:

1421:

883:Artificial intelligence systems integration

1891:(2nd ed.). Cambridge, MA: MIT Press.

1834:Artificial Intelligence: A Modern Approach

1603:


343:(which is defined in terms of "goals") or

190:Artificial Intelligence: A Modern Approach

184:As a definition of artificial intelligence

112:of the objective function. For example, a

1869:

1580:

1570:

1472:

1374:

1282:

1075:

672:


213:"The study and design of rational agents"

1797:

1235:

1223:

777:in terms of behavior, error and success.

713:

652:

623:

602:

574:

543:

495:$f : P^{\ast} \rightarrow A$

331:(i.e., it does not imply John Searle's "

87:

19:For the term in intelligent design, see

1851:

1664:

1454:

1427:

1052:

1050:

1048:

1046:

619:

539:

1920:

1629:

1522:"A Universal Formula for Intelligence"

1389:

1152:

1150:

1148:

1076:Wolchover, Natalie (30 January 2020).

900:– a practical field for implementation

347:(which uses the same definition of a "

16:Software agent which acts autonomously

1886:

677:

354:

1707:

1520:Box, Geeks out of the (2019-12-04).

1104:

1043:

1021:

812:

787:Learn quickly from large amounts of

598:

267:Relevant discussion may be found on

244:

1791:

1757:. Vol. 2. pp. 1745–1749.

1519:

1283:Wolchover, Natalie (January 2020).

1145:

836:create a more balanced presentation

529:Russell and Norvig's classification

226:, and that the agent be capable of

13:

1142:, pp. 4–5, 32, 35, 36 and 56.

648:

201:It defines a "rational agent" as:

14:

1939:

1906:

450:

925:Friendly artificial intelligence

817:

733:Alternative definitions and uses

725:display a form of intelligence.

680:defines four classes of agents:

628:Model-based, utility-based agent

249:

1742:

1727:

1701:

1670:

1649:

1623:

1604:Poole, David; Mackworth, Alan.

1597:

1538:

1513:

1501:

1489:

1455:Nilsson, Nils J. (April 1996).

1448:

1409:

1390:Hutson, Matthew (27 May 2020).

1383:

1349:

1323:

1311:

1302:

1276:

1250:

1241:

939:GOAL agent programming language

932:– IA implemented with adaptive

808:

560:: "if condition, then action".

404:generative adversarial networks

1572:10.1103/PhysRevLett.110.168702

1170:

1161:

1008:

486:

439:The mathematical formalism of

1:

1708:Madrigal, Story by Alexis C.

970:– multiple interactive agents

607:Model-based, goal-based agent

524:Classes of intelligent agents

379:", "objective function", or "

240:

1689:(2). SAE International: 93.

1474:10.1016/0004-3702(96)00007-0

1431:Design of Agent-Based Models

1191:10.1016/j.bushor.2018.08.004

1061:. In Edward N. Zalta (ed.).

170:autonomous intelligent agent

7:

1157:Russell & Norvig (2003)

870:

793:Have memory-based exemplar

534:Russell & Norvig (2003)

279:the claims made and adding

162:abstract intelligent agents

92:Simple reflex agent diagram

10:

1944:

739:virtual personal assistant

717:

358:

25:

18:

1763:10.1109/ITSC.2003.1252782

1496:Russell & Norvig 2003

1416:Russell & Norvig 2003

1212:Russell & Norvig 2003

1140:Russell & Norvig 2003

1059:"Artificial Intelligence"

1038:Russell & Norvig 2003

944:Hybrid intelligent system

571:Model-based reflex agents

341:mathematical optimization

47:perceives its environment

1547:"Causal Entropic Forces"

1376:10.1609/aimag.v33i1.2322

1001:

797:and retrieval capacities

710:for intelligent agents.

697:

657:A general learning agent

579:Model-based reflex agent

139:, and the philosophy of

26:Not to be confused with

1928:Artificial intelligence

1551:Physical Review Letters

1461:Artificial Intelligence

1428:Salamon, Tomas (2011).

959:JACK Intelligent Agents

893:Cognitive architectures

228:belief-desire-intention

193:defines an "agent" as

28:Artificial intelligence

1801:(September 22, 2015).

1630:Fingar, Peter (2018).

974:Reinforcement learning

673:Weiss's classification

658:

629:

608:

580:

549:

496:

424:Reinforcement learning

420:evolutionary computing

388:reinforcement learning

269:Talk:Intelligent agent

215:

207:

199:

118:evolutionary algorithm

114:reinforcement learning

93:

1119:10.1007/s005000050055

968:multiple-agent system

714:Hierarchies of agents

656:

627:

606:

584:"model-based agent".

578:

558:condition-action rule

547:

497:

359:Further information:

211:

203:

195:

143:, as well as in many

91:

1852:Kasabov, N. (1998).

1695:10.4271/2018-01-1066

1526:Geeks out of the box

878:Ambient intelligence

773:Are able to analyze

620:Utility-based agents

540:Simple reflex agents

467:

363:(economics) and

333:strong AI hypothesis

21:Intelligent designer

1563:2013PhRvL.110p8702W

949:Intelligent control

760:rules incrementally

548:Simple reflex agent

45:) is an agent that

1889:Multiagent systems

1887:Weiss, G. (2013).

1825:Russell, Stuart J.

1264:. 26 December 2019

964:Multi-agent system

954:Intelligent system

720:Multi-agent system

659:

630:

609:

581:

550:

492:

355:Objective function

260:possibly contains

155:social simulations

94:

1898:978-0-262-01889-0

1441:978-80-904661-1-1

1179:Business Horizons

1022:Inline references

985:Social simulation

865:self-driving cars

856:

855:

834:. Please help to

826:This section may

599:Goal-based agents

309:

308:

301:

262:original research

145:interdisciplinary

133:cognitive science

39:intelligent agent

1935:

1902:

1883:

1873:

1848:

1820:

1792:Other references

1498:, pp. 46–54

1493:

1487:

1486:

1476:

1467:(1–2): 369–380.

919:Federated search

888:Autonomous agent

851:

848:

821:

820:

813:

756:Accommodate new

635:utility function

501:

499:

498:

493:

485:

484:

396:fitness function

377:utility function

361:utility function

304:

297:

293:

290:

284:

281:inline citations

253:

252:

245:

141:practical reason

49:, takes actions

1799:Domingos, Pedro

1335:Quanta Magazine

1289:Quanta Magazine

1082:Quanta Magazine

898:Cognitive radio

873:

852:

846:

843:

822:

818:

811:

758:problem solving

743:software agents

735:

722:

716:

700:

675:

651:

649:Learning agents

622:

601:

573:

542:

531:

526:

480:

476:

468:

465:

464:

453:

416:neural networks

392:reward function

368:

357:

305:

294:

288:

285:

266:

254:

250:

243:

186:

174:software agents

148:socio-cognitive

35:

24:

17:

12:

11:

5:

1941:

1931:

1930:

1916:

1915:

1908:

1907:External links

1905:

1904:

1903:

1897:

1884:

1864:(6): 453–454.

1849:

1843:

1821:

1816:978-0465065707

1815:

1793:

1790:

1787:

1786:

1771:

1741:

1726:

1700:

1669:

1657:

1648:

1622:

1596:

1557:(16): 168702.

1537:

1512:

1500:

1488:

1447:

1440:

1420:

1408:

1396:Science | AAAS

1382:

1348:

1322:

1310:

1301:

1275:

1249:

1240:

1228:

1216:

1204:

1169:

1160:

1144:

1132:

1107:Soft Computing

1094:

1068:

1042:

1026:

1025:

1023:

1020:

1017:

1016:

1006:

1005:

1003:

1000:

998:

997:

992:

990:Software agent

987:

982:

976:

971:

961:

956:

951:

946:

941:

936:

927:

922:

916:

914:Embodied agent

911:

906:

901:

895:

890:

885:

880:

874:

872:

869:

854:

853:

847:September 2023

838:. Discuss and

825:

823:

816:

810:

807:

806:

805:

798:

791:

785:

778:

771:

761:

750:Nikola Kasabov

734:

731:

718:Main article:

715:

712:

699:

696:

695:

694:

691:

688:

685:

674:

671:

650:

647:

621:

618:

600:

597:

589:internal model

572:

569:

541:

538:

530:

527:

525:

522:

503:

502:

491:

488:

483:

479:

475:

472:

452:

451:Agent function

449:

356:

353:

349:rational agent

307:

306:

257:

255:

248:

242:

239:

185:

182:

110:expected value

103:rational agent

67:control system

32:Embodied agent

15:

9:

6:

4:

3:

2:

1844:0-13-790395-2

1840:

1836:

1835:

1830:

1829:Norvig, Peter

1826:

1822:

1818:

1812:

1808:

1804:

1800:

1796:

1795:

1782:

1778:

1774:

1772:0-7803-8125-4

1236:Domingos 2015

1232:

1225:

1224:Domingos 2015

1220:

1214:, p. 27.

748:According to

390:", it has a "

389:

384:

382:

381:loss function

378:

374:

367:(mathematics)

366:

365:loss function

362:

352:

350:

346:

342:

336:

334:

330:

329:understanding

326:

325:consciousness

322:

318:

314:

303:

300:

292:

289:February 2023

282:

278:

274:

270:

264:

263:

258:This section

153:and computer

57:or acquiring

1717:. Retrieved

1714:The Atlantic

1713:

1703:

1686:

1682:

1672:

1665:Kasabov 1998

1660:

1651:

1639:. Retrieved

1636:Forbes Sites

1635:

1625:

1613:. Retrieved

1609:

1599:

1582:1721.1/79750

1554:

1550:

1540:

1529:. Retrieved

1525:

1515:

1503:

1491:

1464:

1460:

1450:

1430:

1423:

1418:, p. 33

1411:

1399:. Retrieved

1395:

1385:

1366:

1362:

1351:

1339:. Retrieved

1334:

1325:

1313:

1304:

1292:. Retrieved

1288:

1278:

1266:. Retrieved

1261:

1252:

1243:

1238:, Chapter 7.

1231:

1226:, Chapter 5.

1219:

1207:

1185:(1): 15–25.

1182:

1178:

1172:

1163:

1135:

1113:(2): 76–82.

1110:

1106:

1085:. Retrieved

1081:

1071:

1062:

1010:

995:Software bot

979:Semantic Web

930:Fuzzy agents

857:

844:

830:undue weight

827:

809:Applications

747:

736:

727:

723:

708:Intelligence

701:

678:Weiss (2013)

676:

668:

664:

660:

643:

634:

631:

610:

594:

586:

582:

566:

562:

557:

551:

532:

518:

515:

504:

454:

445:uncomputable

438:

409:

400:

385:

369:

337:

310:

295:

286:

259:

232:

216:

212:

208:

204:

200:

196:

188:

187:

169:

165:

161:

159:

122:

107:

95:

51:autonomously

42:

38:

36:

1807:Basic Books

1615:28 November

1610:artint.info

1363:AI Magazine

1318:Martin Ford

1262:VentureBeat

934:fuzzy logic

904:Cybernetics

511:fuzzy logic

412:symbolic AI

317:"synthetic"

313:Turing test

71:human being

1531:2022-10-11

1040:, chpt. 2.

909:DAYDREAMER

802:parameters

782:embodiment

775:themselves

639:comparison

429:Yann LeCun

273:improve it

241:Advantages

230:analysis.

178:autonomous

63:thermostat

1880:120318478

1781:110177067

1719:14 August

1483:0004-3702

1369:(1): 25.

1199:158433736

769:real time

487:→

482:∗

433:AlphaZero

345:economics

277:verifying

271:. Please

129:economics

99:economics

65:or other

59:knowledge

1922:Category

1913:Coneural

1831:(2003).

1591:23679649

871:See also

614:planning

457:function

327:or true

224:rational

151:modeling

55:learning

30:(AI) or

1641:18 June

1559:Bibcode

1401:18 June

1341:18 June

1294:18 June

1268:18 June

1127:9699920

1087:21 June

840:resolve

795:storage

767:and in

704:Freedom

554:percept

513:, etc.

507:utility

418:and to

81:, or a

1895:

1878:

1841:

1813:

1779:

1769:

1589:

1481:

1438:

1337:. 2018

1197:

1125:

765:online

763:Adapt

410:While

234:Kaplan

220:robust

137:ethics

125:agents

1876:S2CID

1777:S2CID

1195:S2CID

1123:S2CID

1002:Notes

861:Waymo

828:lend

800:Have

698:Other

83:biome

79:state

1893:ISBN

1839:ISBN

1811:ISBN

1767:ISBN

1721:2020

1643:2020

1617:2018

1587:PMID

1479:ISSN

1436:ISBN

1403:2020

1343:2020

1296:2020

1270:2020

1089:2020

966:and

789:data

706:and

441:AIXI

383:".)

351:").

321:mind

176:(an

77:, a

75:firm

1866:doi

1759:doi

1691:doi

1577:hdl

1567:doi

1555:110

1469:doi

1371:doi

1187:doi

1115:doi

275:by

166:AIA

127:in

105:".

101:, "

1924::

1874:.

1862:13

1860:.

1856:.

1827:;

1809:.

1805:.

1775:.

1765:.

1753:.

1712:.

1685:.

1681:.

1634:.

1608:.

1585:.

1575:.

1565:.

1553:.

1549:.

1524:.

1477:.

1465:82

1463:.

1459:.

1394:.

1367:33

1365:.

1361:.

1333:.

1287:.

1260:.

1193:.

1183:62

1181:.

1147:^

1121:.

1109:.

1097:^

1080:.

1045:^

1030:^

422:.

373:Go

323:,

157:.

135:,

85:.

43:IA

1901:.

1882:.

1868::

1847:.

1819:.

1783:.

1761::

1723:.

1697:.

1693::

1687:1

1667:.

1645:.

1619:.

1593:.

1579::

1569::

1561::

1534:.

1485:.

1471::

1444:.

1405:.

1379:.

1373::

1345:.

1298:.

1272:.

1201:.

1189::

1129:.

1117::

1111:3

1091:.

1065:.

849:)

845:(

784:)

490:A

478:P

474::

471:f

302:)

296:(

291:)

287:(

265:.

164:(

41:(

34:.

23:.
