The terms "concurrent computing", "parallel computing", and "distributed computing" have much overlap, and no clear distinction exists between them. The same system may be characterized both as "parallel" and "distributed"; the processors in a typical distributed system run concurrently in parallel. Parallel computing may be seen as a particularly tightly coupled form of distributed computing, and distributed computing may be seen as a loosely coupled form of parallel computing. Nevertheless, it is possible to roughly classify concurrent systems as "parallel" or "distributed" using the following criteria:

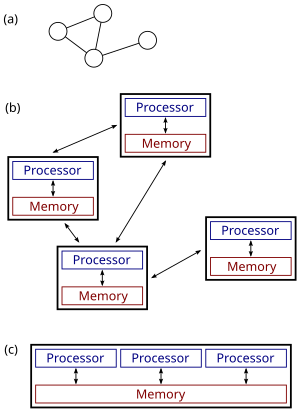

Figure (b) shows the same distributed system in more detail: each computer has its own local memory, and information can be exchanged only by passing messages from one node to another by using the available communication links. Figure (c) shows a parallel system in which each processor has direct access to a shared memory.
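The contrast between Figures (b) and (c) can be sketched in Python. This is only an illustration under stated assumptions: all workers below are threads inside one process, so the "private memory" of the message-passing version is a convention rather than a hardware guarantee, and both function names are hypothetical.

```python
import queue
import threading


def shared_memory_sum(values, workers=4):
    """Figure (c) style: every worker adds into one shared cell guarded by a lock."""
    total = [0]                # shared memory visible to all workers
    lock = threading.Lock()
    chunks = [values[i::workers] for i in range(workers)]

    def work(chunk):
        partial = sum(chunk)   # local computation
        with lock:             # serialize writes to the shared cell
            total[0] += partial

    threads = [threading.Thread(target=work, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]


def message_passing_sum(values, workers=4):
    """Figure (b) style: workers keep private state and only send messages."""
    link = queue.Queue()       # stands in for the communication link to a collector
    chunks = [values[i::workers] for i in range(workers)]

    def work(chunk):
        link.put(sum(chunk))   # private computation, then exactly one message

    threads = [threading.Thread(target=work, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # the collector receives one message per worker
    return sum(link.get() for _ in range(workers))
```

Both `shared_memory_sum(list(range(100)))` and `message_passing_sum(list(range(100)))` return 4950; the two versions differ only in how partial results travel between workers.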

During each communication round, all nodes in parallel (1) receive the latest messages from their neighbors, (2) perform arbitrary local computation, and (3) send new messages to their neighbors. In such systems, a central complexity measure is the number of synchronous communication rounds required to complete the task.
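A minimal simulation of these synchronous rounds, assuming the network is given as an adjacency dictionary (a hypothetical input format chosen for illustration). Each node starts out knowing only its own identifier; after r rounds it knows exactly the identifiers within distance r, which is why the number of rounds bounds how far information can travel.

```python
def local_rounds(adj, rounds):
    """Simulate `rounds` synchronous rounds of the LOCAL model.

    adj maps each node to a list of its neighbors. In every round each node
    (1) receives the sets its neighbors sent, (2) merges them locally, and
    (3) sends the merged set in the next round.
    """
    known = {v: {v} for v in adj}  # before round 1 a node knows only itself
    for _ in range(rounds):
        # (1) receive: the messages are the sets held at the end of last round
        inbox = {v: [known[u] for u in adj[v]] for v in adj}
        # (2) local computation: union of own knowledge and all messages
        known = {v: known[v].union(*inbox[v]) for v in adj}
        # (3) send: the new sets are what neighbors receive next round
    return known


path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
print(local_rounds(path, 1)[0])  # {0, 1}
print(local_rounds(path, 3)[0])  # {0, 1, 2, 3}
```

On the four-node path, node 0 needs three rounds, the diameter, before it has heard of every node.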

However, there are many interesting special cases that are decidable. In particular, it is possible to reason about the behaviour of a network of finite-state machines. One example is telling whether a given network of interacting (asynchronous and non-deterministic) finite-state machines can reach a

Distributed programs are abstract descriptions of distributed systems. A distributed program consists of a collection of processes that work concurrently and communicate by explicit message passing. Each process can access a set of variables which are disjoint from the variables that can be changed

as the organizer of some task distributed among several computers (nodes). Before the task is begun, all network nodes are either unaware which node will serve as the "coordinator" (or leader) of the task, or unable to communicate with the current coordinator. After a coordinator election algorithm

In the analysis of distributed algorithms, more attention is usually paid to communication operations than to computational steps. Perhaps the simplest model of distributed computing is a synchronous system where all nodes operate in lockstep. This model is commonly known as the LOCAL model.

Various hardware and software architectures are used for distributed computing. At a lower level, it is necessary to interconnect multiple CPUs with some sort of network, regardless of whether that network is printed onto a circuit board or made up of loosely coupled devices and cables. At a higher

A distributed system may have a common goal, such as solving a large computational problem; the user then perceives the collection of autonomous processors as a unit. Alternatively, each computer may have its own user with individual needs, and the purpose of the distributed system is to coordinate

According to the Reactive Manifesto, reactive distributed systems are responsive, resilient, elastic and message-driven. Consequently, reactive systems are more flexible, loosely coupled and scalable. To make a system reactive, implementing the Reactive Principles is advised.

In order to perform coordination, distributed systems employ the concept of coordinators. The coordinator election problem is to choose a process from among a group of processes on different processors in a distributed system to act as the central coordinator. Several central coordinator election

Moreover, a parallel algorithm can be implemented either in a parallel system (using shared memory) or in a distributed system (using message passing). The traditional boundary between parallel and distributed algorithms (choose a suitable network vs. run in any given network) does not lie in the

The field of concurrent and distributed computing studies similar questions in the case of either multiple computers, or a computer that executes a network of interacting processes: which computational problems can be solved in such a network and how efficiently? However, it is not at all obvious

The figure on the right illustrates the difference between distributed and parallel systems. Figure (a) is a schematic view of a typical distributed system; the system is represented as a network topology in which each node is a computer and each line connecting the nodes is a communication link.

The network nodes communicate among themselves in order to decide which of them will get into the "coordinator" state. For that, they need some method in order to break the symmetry among them. For example, if each node has unique and comparable identities, then the nodes can compare their

communication rounds, then the nodes in the network must produce their output without having the possibility to obtain information about distant parts of the network. In other words, the nodes must make globally consistent decisions based on information that is available in their

Peer-to-peer: architectures where there are no special machines that provide a service or manage the network resources. Instead, all responsibilities are uniformly divided among all machines, known as peers. Peers can serve both as clients and as servers. Examples of this architecture include

In parallel algorithms, yet another resource in addition to time and space is the number of computers. Indeed, often there is a trade-off between the running time and the number of computers: the problem can be solved faster if there are more computers running in parallel (see

Traditional computational problems take the perspective that the user asks a question, a computer (or a distributed system) processes the question, then produces an answer and stops. However, there are also problems where the system is required not to stop, including the

Examples of such network graphs include undirected rings, unidirectional rings, complete graphs, grids, directed Euler graphs, and others. A general method that decouples the issue of the graph family from the design of the coordinator election algorithm was suggested by Korach, Kutten, and Moran.

Database-centric architecture in particular provides relational processing analytics in a schematic architecture allowing for live environment relay. This enables distributed computing functions both within and beyond the parameters of a networked database.

rounds, and understanding which problems can be solved by such algorithms is one of the central research questions of the field. Typically an algorithm which solves a problem in polylogarithmic time in the network size is considered efficient in this model.

Another basic aspect of distributed computing architecture is the method of communicating and coordinating work among concurrent processes. Through various message passing protocols, processes may communicate directly with one another, typically in a

for more detailed discussion). Nevertheless, as a rule of thumb, high-performance parallel computation in a shared-memory multiprocessor uses parallel algorithms while the coordination of a large-scale distributed system uses distributed algorithms.

The structure of the system (network topology, network latency, number of computers) is not known in advance, the system may consist of different kinds of computers and network links, and the system may change during the execution of a distributed

what is meant by "solving a problem" in the case of a concurrent or distributed system: for example, what is the task of the algorithm designer, and what is the concurrent or distributed equivalent of a sequential general-purpose computer?

Distributed systems cost significantly more than monolithic architectures, primarily due to increased needs for additional hardware, servers, gateways, firewalls, new subnets, proxies, and so on. Also, distributed systems are prone to

Systems consist of a number of physically distributed components that work independently using their private storage, but also communicate from time to time by explicit message passing. Such systems are called distributed

Models such as Boolean circuits and sorting networks are used. A Boolean circuit can be seen as a computer network: each gate is a computer that runs an extremely simple computer program. Similarly, a sorting network can be seen as a computer network: each comparator is a computer.
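A small sorting network makes the analogy concrete. This sketch assumes the standard five-comparator network for four inputs; each (i, j) pair plays the role of a "computer" that reads two wires and writes them back in order, and the comparators within one layer touch disjoint wires, so they could operate simultaneously. The names `SORT4` and `run_network` are hypothetical.

```python
import itertools

# The classic five-comparator network for four inputs, grouped into layers.
# Each (i, j) pair is one comparator: a tiny "computer" that reads wires i and j
# and writes the smaller value to i and the larger to j. Comparators in the same
# layer touch disjoint wires, so they could all operate at the same time.
SORT4 = [
    [(0, 1), (2, 3)],
    [(0, 2), (1, 3)],
    [(1, 2)],
]


def run_network(network, values):
    wires = list(values)
    for layer in network:
        for i, j in layer:
            if wires[i] > wires[j]:
                wires[i], wires[j] = wires[j], wires[i]
    return wires


# Check every ordering of four distinct inputs.
all_sorted = all(
    run_network(SORT4, p) == [1, 2, 3, 4]
    for p in itertools.permutations([1, 2, 3, 4])
)
```

The network has depth three, so with one "computer" per comparator the sort finishes in three parallel steps regardless of the input order.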

These terms originally referred to computer networks where individual computers were physically distributed within some geographical area. The terms are nowadays used in a much wider sense, even referring to autonomous

Shared-memory programs can be extended to distributed systems if the underlying operating system encapsulates the communication between nodes and virtually unifies the memory across all individual systems.

Ohlídal, M.; Jaroš, J.; Schwarz, J.; et al. (2006). "Evolutionary Design of OAB and AAB Communication Schedules for Interconnection Networks". In Rothlauf, F.; Branke, J.; Cagnoni, S. (eds.).

transmitted, and time. The algorithm suggested by Gallager, Humblet, and Spira for general undirected graphs has had a strong impact on the design of distributed algorithms in general, and won the

n-tier: architectures that typically refer to web applications which further forward their requests to other enterprise services. This type of application is the one most responsible for the success of

Client–server: architectures where smart clients contact the server for data, then format and display it to the users. Input at the client is committed back to the server when it represents a permanent change.

is an analogous example from the field of centralised computation: we are given a computer program and the task is to decide whether it halts or runs forever. The halting problem is

is encoded as a string, and the string is given as input to a computer. The computer program finds a coloring of the graph, encodes the coloring as a string, and outputs the result.
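The centralised approach can be sketched as one function that reads a graph encoded as a string and writes back a coloring. The `u-v,u-v` edge-list format, the output format, and the greedy strategy are all assumptions made for illustration; greedy coloring is simple but does not in general use the fewest possible colors.

```python
def color_graph(encoded: str) -> str:
    """Read a graph encoded as 'u-v,u-v,...', greedily color it, and return
    the coloring encoded as 'vertex:color,...'."""
    adj = {}
    for edge in encoded.split(","):
        u, v = (int(x) for x in edge.split("-"))
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)

    color = {}
    for v in sorted(adj):
        # smallest color not already taken by a colored neighbor
        used = {color[u] for u in adj[v] if u in color}
        c = 0
        while c in used:
            c += 1
        color[v] = c

    return ",".join(f"{v}:{color[v]}" for v in sorted(color))
```

For a triangle, `color_graph("0-1,1-2,2-0")` returns `"0:0,1:1,2:2"`; a single computer sees the whole input string, which is exactly what the distributed setting takes away.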

The features of this concept are typically captured with the CONGEST(B) model, which is defined like the LOCAL model except that single messages can contain only B bits.

is encoded as a string. However, multiple computers can access the same string in parallel. Each computer might focus on one part of the graph and produce a coloring for that part.

It is decidable, but it is not likely that there is an efficient (centralised, parallel or distributed) algorithm that solves the problem in the case of large networks.

While the field of parallel algorithms has a different focus than the field of distributed algorithms, there is much interaction between the two fields. For example, the

Many tasks that we would like to automate by using a computer are of question–answer type: we would like to ask a question and the computer should produce an answer. In

The algorithm designer only chooses the computer program. All computers run the same program. The system must work correctly regardless of the structure of the network.

and managing the independent failure of components. When a component of one system fails, the entire system does not fail. Examples of distributed systems vary from

In the case of distributed algorithms, computational problems are typically related to graphs. Often the graph that describes the structure of the computer network

The class NC can be defined equally well by using the PRAM formalism or Boolean circuits; PRAM machines can simulate Boolean circuits efficiently and vice versa.
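To illustrate polylogarithmic time with polynomially many processors, a minimal sketch can simulate a synchronous PRAM summing n numbers by pairwise tree reduction: each step uses at most n/2 processors, and the number of steps is ceil(log2 n). The simulation itself runs sequentially; computing all updates before writing any of them stands in for one synchronous PRAM step. The function name is hypothetical.

```python
def pram_sum(values):
    """Simulate a synchronous PRAM tree reduction.

    Returns (total, number_of_parallel_steps). In each step, processor i adds
    cells[i + stride] into cells[i]; at most n // 2 processors are active.
    """
    cells = list(values)          # shared memory
    n = len(cells)
    steps = 0
    stride = 1
    while stride < n:
        # read phase: every active processor reads its two cells
        updates = {i: cells[i] + cells[i + stride]
                   for i in range(0, n - stride, 2 * stride)}
        # write phase: all processors write simultaneously
        for i, s in updates.items():
            cells[i] = s
        stride *= 2
        steps += 1
    return (cells[0] if cells else 0), steps
```

Summing 1000 numbers takes 10 parallel steps, since ceil(log2 1000) = 10, compared with 999 additions on a single sequential processor.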

The discussion below focuses on the case of multiple computers, although many of the issues are the same for concurrent processes running on a single computer.

in the general case, and naturally understanding the behaviour of a computer network is at least as hard as understanding the behaviour of one computer.

the use of a communication network that connects several computers: for example, data produced in one physical location and required in another location.

be the diameter of the network. On the one hand, any computable problem can be solved trivially in a synchronous distributed system in approximately 2

was invented in the early 1970s. E-mail became the most successful application of ARPANET, and it is probably the earliest example of a large-scale

The study of distributed computing became its own branch of computer science in the late 1970s and early 1980s. The first conference in the field,

is the process of writing such programs. There are many different types of implementations for the message passing mechanism, including pure HTTP,

for graph coloring was originally presented as a parallel algorithm, but the same technique can also be used directly as a distributed algorithm.
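The technique meant here is Cole and Vishkin's deterministic coin tossing (the 1986 reference). A minimal sketch on a directed ring, assuming at least two nodes and distinct non-negative integer identifiers as the initial colors: in each synchronous round, a node compares its color with its successor's, finds the lowest bit position i where they differ, and takes 2i plus its own bit i as its new color. Adjacent nodes cannot choose the same (i, bit) pair, so the coloring stays proper while the number of bits shrinks roughly logarithmically per round. A further step that reduces six colors to three is commonly added and is omitted here; the function names are hypothetical.

```python
def cv_step(colors):
    """One synchronous round on a directed ring: node j reads the color of its
    successor (j + 1) % n and recolors itself."""
    n = len(colors)
    new = []
    for j, c in enumerate(colors):
        diff = c ^ colors[(j + 1) % n]          # nonzero while the coloring is proper
        i = (diff & -diff).bit_length() - 1     # index of the lowest differing bit
        new.append(2 * i + ((c >> i) & 1))      # new color encodes (i, own bit i)
    return new


def cole_vishkin_ring(ids):
    """Reduce distinct IDs on a directed ring to a proper coloring with at most
    six colors; returns (colors, rounds)."""
    colors, rounds = list(ids), 0
    while max(colors) >= 6:
        colors = cv_step(colors)
        rounds += 1
    return colors, rounds
```

For example, `cole_vishkin_ring([5, 2, 7])` needs a single round and yields the proper coloring `[1, 0, 3]`. Each node reads only its successor, so the same code describes a parallel algorithm over an array and a distributed algorithm over a ring.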

TULSIRAMJI GAIKWAD-PATIL College of Engineering & Technology, Nagpur, Department of Information Technology. Introduction to Distributed Systems.

In distributed computing, a problem is divided into many tasks, each of which is solved by one or more computers, which communicate with each other via message passing.

problems. In these problems, the distributed system is supposed to continuously coordinate the use of shared resources so that no conflicts or

A model that is closer to the behavior of real-world multiprocessor machines and takes into account the use of machine instructions, such as

The Reactive Principles are a set of principles and patterns which help make cloud-native as well as edge-native applications more reactive.

On the other hand, a well-designed distributed system is more scalable, more durable, more changeable and more fine-tuned than a

There are many cases in which the use of a single computer would be possible in principle, but the use of a distributed system is

Haussmann, J. (2019). "Cost-efficient parallel processing of irregularly structured problems in cloud computing environments".

The definition of this problem is often attributed to LeLann, who formalized it as a method to create a new token in a token

Rodriguez, Carlos; Villagra, Marcos; Baran, Benjamin (2007). "Asynchronous team algorithms for Boolean Satisfiability".

The main focus is on high-performance computation that exploits the processing power of multiple computers in parallel.

to one another in order to achieve a common goal. Three significant challenges of distributed systems are: maintaining

has been run, however, each node throughout the network recognizes a particular, unique node as the task coordinator.

All processors have access to a shared memory. The algorithm designer chooses the program executed by each processor.

While there is no single definition of a distributed system, the following defining properties are commonly used:

In addition to ARPANET (and its successor, the global Internet), other early worldwide computer networks included

Each computer has only a limited, incomplete view of the system. Each computer may know only one part of the input.

for each instance. Instances are questions that we can ask, and solutions are desired answers to these questions.

Theoretical computer science seeks to understand which computational problems can be solved by using a computer (

The algorithm designer chooses the structure of the network, as well as the program executed by each computer.

Whether these CPUs share resources or not determines a first distinction between three types of architecture:

Proceedings. IEEE INFOCOM'90: Ninth Annual Joint Conference of the IEEE Computer and Communications Societies

There are also fundamental challenges that are unique to distributed computing, for example those related to

It can allow for much larger storage and memory, faster compute, and higher bandwidth than a single machine.

(DISC) was first held in Ottawa in 1985 as the International Workshop on Distributed Algorithms on Graphs.

Distributed systems are groups of networked computers which share a common goal for their work.

Moreover, a distributed system may be easier to expand and manage than a monolithic uniprocessor system.

same place as the boundary between parallel and distributed systems (shared memory vs. message passing).

(PRAM) that are used. However, the classical PRAM model assumes synchronous access to the shared memory.

can be used as abstract models of a sequential general-purpose computer executing such an algorithm.

The Age of Cryptocurrency: How Bitcoin and the Blockchain Are Challenging the Global Economic Order

that produces a correct solution for any given instance. Such an algorithm can be implemented as a

distributed information processing systems such as banking systems and airline reservation systems;

The situation is further complicated by the traditional uses of the terms parallel and distributed

Traditionally, it is said that a problem can be solved by using a computer if we can design an

clients can be used. This simplifies application deployment. Most web applications are three-tier.

Examples of distributed systems and applications of distributed computing include the following:

There is a wide body of work on this model, a summary of which can be found in the literature.

should be measured through "99th percentile" because "median" and "average" can be misleading.
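A minimal sketch of why the 99th percentile is preferred, using a hypothetical set of 100 request latencies in which two requests are very slow. The nearest-rank percentile definition below is one of several common conventions, and `latency_summary` is a hypothetical helper.

```python
import statistics


def latency_summary(samples_ms):
    """Return mean, median and 99th percentile (nearest-rank) of latencies."""
    ordered = sorted(samples_ms)
    rank = -(-99 * len(ordered) // 100)   # ceil(0.99 * n) with integer arithmetic
    return {
        "mean": statistics.mean(ordered),
        "median": statistics.median(ordered),
        "p99": ordered[rank - 1],
    }


samples = [10] * 98 + [1000] * 2          # 98 fast requests, 2 slow ones
summary = latency_summary(samples)
```

Here the median is 10 ms and the mean about 29.8 ms, yet the 99th percentile is 1000 ms: the median hides the slow requests entirely and the mean blurs them into a moderate-looking number.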

the computers must exchange messages with each other to discover more about the structure of

Chiu, G. (1990). "A model for optimal database allocation in distributed computing systems".

The use of concurrent processes which communicate through message-passing has its roots in

Another commonly used measure is the total number of bits transmitted in the network (cf.

(1986), "Deterministic coin tossing with applications to optimal parallel list ranking",

by using a polynomial number of processors, then the problem is said to be in the class

that run on the same physical computer and interact with each other by message passing.

"A Modular Technique for the Design of Efficient Distributed Leader Finding Algorithms"

a distributed system that solves a given problem. A complementary research problem is

Foundations of Data Intensive Applications: Large Scale Data Analytics Under the Hood

It may be more cost-efficient to obtain the desired level of performance by using a

identities, and decide that the node with the highest identity is the coordinator.
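A minimal sketch of this idea, assuming a synchronous connected network, unique integer identities, and a known upper bound on the diameter (n - 1 rounds always suffice). Each node repeatedly adopts the largest identity it has heard of; once every node holds the maximum, the node whose own identity equals it is the coordinator. The function name and the adjacency-dictionary input are hypothetical.

```python
def flood_max_election(adj, ids, rounds):
    """Return, for each node, the identity it believes belongs to the coordinator."""
    best = dict(ids)  # each node starts by believing in itself
    for _ in range(rounds):
        # synchronous round: every node reads its neighbors' current values
        best = {v: max([best[v]] + [best[u] for u in adj[v]]) for v in adj}
    return best


path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
ids = {0: 7, 1: 3, 2: 9, 3: 4}
beliefs = flood_max_election(path, ids, rounds=3)  # the path has diameter 3
coordinators = [v for v in path if beliefs[v] == ids[v]]
```

After three rounds every node believes in identity 9, and only node 2 declares itself coordinator. With too few rounds, for example one, node 0 still believes in its own identity 7, which is why the round count must reach the diameter.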

The main focus is on coordinating the operation of an arbitrary distributed system.

that runs on a general-purpose computer: the program reads a problem instance from

from the 1980s, both of which were used to support distributed discussion systems.

The components of a distributed system communicate and coordinate their actions by

architectures studied in the 1960s. The first widespread distributed systems were

also refers to the use of distributed systems to solve computational problems.

Initially, each computer only knows about its immediate neighbors in the graph

is the structure of the computer network. There is one computer for each node of

Distributed programming typically falls into one of several basic architectures:

Coordinator election algorithms are designed to be economical in terms of total

Many distributed algorithms are known with the running time much smaller than

It can provide more reliability than a non-distributed system, as there is no

"How big data and distributed systems solve traditional scalability problems"

On the other hand, if the running time of the algorithm is much smaller than

Reasons for using distributed systems and distributed computing may include:

of several low-end computers, in comparison with a single high-end computer.

the use of shared resources or provide communication services to the users.

Three-tier: architectures that move the client intelligence to a middle tier so that

2007 2nd Bio-Inspired Models of Network, Information and Computing Systems

On the Way to the Web: The Secret History of the Internet and its Founders

Monolith to Microservices: Evolutionary Patterns to Transform Your Monolith

Consider the computational problem of finding a coloring of a given graph

that do not quite match the above definitions of parallel and distributed

Parallel Computation Systems For Robotics: Algorithms And Architectures

can enable distributed computing to be done without any form of direct

Information is exchanged by passing messages between the processors.

Other typical properties of distributed systems include the following:

in terms such as "distributed system", "distributed programming", and "

Distributed Computing: Fundamentals, Simulations, and Advanced Topics

LeLann, G. (1977). "Distributed systems - toward a formal approach".

"Trading Bit, Message, and Time Complexity of Distributed Algorithms"

Lind, P.; Alm, M. (2006), "A database-centric virtual chemistry system",

rounds), solve the problem, and inform each node about the solution (

communication rounds: simply gather all information in one location (

In distributed computing, each processor has its own private memory.

Operating Systems: Three Easy Pieces, Chapter 48: Distributed Systems

Many other algorithms were suggested for different kinds of network graphs.

the problem instance. This is illustrated in the following example.

Foundations of Multithreaded, Parallel, and Distributed Programming

can be used to run synchronous algorithms in asynchronous systems.

Fundamentals of Software Architecture: An Engineering Approach

whose inter-communicating components are located on different

Randomness Through Computation: Some Answers, More Questions

algorithms provide globally consistent physical time stamps.

Introduction to Reliable and Secure Distributed Programming

Christian Cachin; Rachid Guerraoui; Luís Rodrigues (2011),

"A Distributed Algorithm for Minimum-Weight Spanning Trees"

In parallel computing, all processors may have access to a

Springer Science & Business Media. pp. 267–278.

performs some computation, and produces the solution as

Springer Science & Business Media. pp. 51–65.

Peer-to-Peer Computing: Principles and Applications

Different fields might take the following approaches:

Models of Computation: Exploring the Power of Computing

Distributed Systems: Concepts and Design (5th Edition)

"Distributed computing column 32 – The year in review"

Bentaleb, A.; Yifan, L.; Xin, J.; et al. (2016).

ACM Transactions on Programming Languages and Systems

ACM Transactions on Programming Languages and Systems

Each computer must produce its own color as output.

There are several autonomous computational entities.

(1992), "Locality in distributed graph algorithms",

Distributed Computing: A Locality-Sensitive Approach

"Neural Networks for Real-Time Robotic Applications"

London: Springer London. 2010. pp. 373–406.

London: Springer London. 2010. pp. 373–406.

for an influential paper in distributed computing.

Much research is also focused on understanding the

that runs within a distributed system is called a

Keidar, Idit; Rajsbaum, Sergio, eds. (2000–2009),

(1st ed.). O'Reilly Media. pp. 146–147.

This complexity measure is closely related to the

"Grapevine: An exercise in distributed computing"

"Major unsolved problems in distributed systems?"

R. G. Gallager, P. A. Humblet, and P. M. Spira (January 1983).

Quang Hieu Vu; Mihai Lupu; Beng Chin Ooi (2010).

Upper Saddle River, NJ: Pearson Prentice Hall.

Tanenbaum, Andrew S.; Steen, Maarten van (2002).

Edsger W. Dijkstra Prize in Distributed Computing

Toomarian, N.B.; Barhen, J.; Gulati, S. (1992).

Formally, a computational problem consists of

International Symposium on Distributed Computing

(PODC), dates back to 1982, and its counterpart

Symposium on Principles of Distributed Computing

was introduced in the late 1960s, and ARPANET

"Modern Messaging for Distributed Sytems (sic)"

Distributed algorithms in message-passing model

"Indeterminism and Randomness Through Physics"

Cambridge University Press. pp. 35–36.

the properties of a given distributed system.

Birrell, A. D.; Levin, R.; Schroeder, M. D.;

Java Distributed Computing by Jim Faber, 1998

Distributed Systems – An Algorithmic Approach

"Real Time And Distributed Computing Systems"

Distributed systems: principles and paradigms

Computational Complexity – A Modern Approach

and one communication link for each edge of

Parallel algorithms in message-passing model

The entities communicate with each other by

Dusseau, Remzi H.; Dusseau, Andrea (2016).

to exchange information between processors.

Packt Publishing Ltd. pp. 100–101.

If a decision problem can be solved in

Parallel algorithms in shared-memory model

List of distributed computing conferences

is the process of designating a single

Distributed algorithmic mechanism design

running on those CPUs with some sort of

System with multiple networked computers

Coulouris, George; et al. (2011),

Schneider, J.; Wattenhofer, R. (2011).

Examples of related problems include

distributed database management systems

level, it is necessary to interconnect

Introduction to Distributed Algorithms

Applications of Evolutionary Computing

Introduction to Distributed Algorithms

The very nature of an application may

"Ian Peter's History of the Internet"

The Art of Multiprocessor Programming

Cormen, Leiserson & Rivest (1990)

Cormen, Leiserson & Rivest (1990)

Cormen, Leiserson & Rivest (1990)

"Parallel and Distributed Algorithms"

Journal of Physics: Conference Series

World Scientific. pp. 112–113.

In Fijany, A.; Bejczy, A. (eds.).

Heidelberg: Springer. p. 16.

St. Martin's Press, January 27, 2015.

National University of Singapore.

List of volunteer computing projects

Three viewpoints are commonly used:

for practical reasons. For example:

"A primer on distributed computing"

Federation (information technology)

which was invented in the 1970s.

each of which has its own local

2988:from the original on 17 March 2018

1116:in which the token has been lost.

1079:Note that in distributed systems,

579:massively multiplayer online games

222:Parallel and distributed computing

78:fallacies of distributed computing

70:massively multiplayer online games

3692:Elements of Distributed Computing

2364:. World Scientific. p. 214.

1142:Properties of distributed systems

306:, one of the predecessors of the

3841:from the original on 2016-07-30.

3189:Fundamentals of Database Systems

2910:from the original on 2007-04-18.

2829:from the original on 2017-09-26.

2333:Reactive Application Development

201:used for distributed computing:

4121:Analysis of parallel algorithms

3668:from the original on 2010-08-24

3569:from the original on 2010-01-20

3549:from the original on 2021-05-13

3528:from the original on 2013-01-08

3490:"What can be computed locally?"

3029:from the original on 2020-08-01

2929:from the original on 2012-11-24

2777:: 155·160 – via Elsevier.

2751:from the original on 2023-01-20

2594:from the original on 2020-08-01

2400:. Addison Wesley. p. 209.

2378:from the original on 2020-08-01

2051:from the original on 2023-01-20

2013:from the original on 2023-01-20

1927:from the original on 2017-03-26

1392:from the original on 2020-08-12

1330:Parallel distributed processing

1052:nature of distributed systems:

775:parallel random-access machines

725:computational complexity theory

460:"database-centric" architecture

458:relationship. Alternatively, a

230:(a), (b): a distributed system.

3773:"Distributed computing column"

1600:10.1088/1742-6596/608/1/012038

84:deployed on a single machine.

56:of components, overcoming the

4068:Simultaneous and heterogenous

3380:10.1016/S0019-9958(86)80023-7

1299:Library Oriented Architecture

1146:So far the focus has been on

773:One theoretical model is the

3863:10.1109/BIMNICS.2007.4610083

3711:, Cambridge University Press

3068:Andrews, Gregory R. (2000),

2560:Naor & Stockmeyer (1995)

2299:Journal of Cluster Computing

2285:Elmasri & Navathe (2000)

2257:Elmasri & Navathe (2000)

1526:10.1007/978-1-84882-745-5_11

1467:Ford, Neal (March 3, 2020).

1420:10.1007/978-1-84882-745-5_11

1239:Distributed operating system

702:theoretical computer science

676:Reactive distributed systems

656:volunteer computing projects

3598:and Jennifer Welch (2004),

2734:Apache ZooKeeper Essentials

2433:Herlihy & Shavit (2008)

1345:Shared nothing architecture

1016:dining philosophers problem

826:A commonly used model is a

616:real-time process control:

464:inter-process communication

3963:High-performance computing

3661:Java Distributed Computing

3290:Papadimitriou, Christos H.

3214:, Chapman & Hall/CRC,

3135:Introduction to Algorithms

2952:cstheory.stackexchange.com

1455:Dusseau & Dusseau 2016

1335:Parallel programming model

1169:deadlock. This problem is

789:asynchronous shared memory

626:industrial control systems

604:distributed cache such as

4597:Automatic parallelization

4233:Application checkpointing

3811:Communications of the ACM

3619:(2. ed.), Springer,

3519:10.1137/S0097539793254571

3497:SIAM Journal on Computing

3452:SIAM Journal on Computing

2484:Cole & Vishkin (1986)

2311:10.1007/s10586-018-2879-3

1999:. Apress. pp. 44–5.

1518:Texts in Computer Science

1412:Texts in Computer Science

1259:Flat neighborhood network

1039:Byzantine fault tolerance

749:universal Turing machines

216:Event driven architecture

74:peer-to-peer applications

3294:Computational Complexity

2923:"Distributed Algorithms"

2512:Arora & Barak (2009)

1819:. O'Reilly Media. 2020.

1516:"Distributed Programs".

1410:"Distributed Programs".

1294:Layered queueing network

1003:communication complexity

704:, such tasks are called

552:wireless sensor networks

466:, by utilizing a shared

206:Saga interaction pattern

181:in individual computers.

19:Not to be confused with

4612:Embarrassingly parallel

4607:Deterministic algorithm

3721:Parallel Program Design

3690:Garg, Vijay K. (2002),

3421:10.1145/1466390.1466402

3367:Information and Control

3210:Ghosh, Sukumar (2007),

3011:. In Hector, Z. (ed.).

1209:Decentralized computing

723:) and how efficiently (

685:Theoretical foundations

501:single point of failure

316:distributed application

232:(c): a parallel system.

97:distributed programming

21:Decentralized computing

4327:Associative processing

4283:Non-blocking algorithm

4089:Clustered multi-thread

3719:; et al. (1988),

3541:Godfrey, Bill (2002).

3236:Distributed Algorithms

3098:; Barak, Boaz (2009),

2771:Information Processing

2576:. In Peleg, D. (ed.).

2554:, Sections 2.3 and 7.

1234:Distributed networking

923:Cole–Vishkin algorithm

889:Distributed algorithms

858:Centralized algorithms

745:random-access machines

706:computational problems

565:network applications:

199:architectural patterns

82:monolithic application

58:lack of a global clock

4677:Distributed computing

4443:Hardware acceleration

4356:Superscalar processor

4346:Dataflow architecture

3943:Distributed computing

3892:Distributed computing

3824:10.1145/358468.358487

3126:Leiserson, Charles E.

2812:10.1145/357195.357200

2578:Distributed Computing

2458:, Sections 28 and 29.

2396:Savage, J.E. (1998).

1549:by any other process.

1340:Plan 9 from Bell Labs

1214:Distributed algorithm

1073:Clock synchronization

991:local D-neighbourhood

743:. Formalisms such as

691:Distributed algorithm

664:in computer graphics.

662:distributed rendering

589:distributed databases

573:peer-to-peer networks

131:distributed algorithm

115:distributed computing

111:Distributed computing

27:Distributed computing

4322:Pipelined processing

4271:Explicit parallelism

4266:Implicit parallelism

4256:Dataflow programming

3894:at Wikimedia Commons

3707:Tel, Gerard (1994),

3602:, Wiley-Interscience

3185:Navathe, Shamkant B.

3047:Papadimitriou (1994)

2528:Papadimitriou (1994)

2516:Papadimitriou (1994)

1974:The history of email

1884:Papadimitriou (1994)

1581:Magnoni, L. (2015).

1244:Eventual consistency

1204:Dataflow programming

1094:Coordinator election

965:of the network. Let

944:polylogarithmic time

832:finite-state machine

721:computability theory

640:scientific computing

634:parallel computation

599:network file systems

352:communication system

238:concurrent computing

4546:Parallel Extensions

4351:Pipelined processor

3658:Faber, Jim (1998),

3625:2011itra.book.....C

3561:Peter, Ian (2004).

3260:Herlihy, Maurice P.

3007:Svozil, K. (2011).

2893:10.1145/77606.77610

2682:, Sections 6.2–6.3.

2153:Vigna P, Casey MJ.

1069:ordering of events.

956:communication round

933:Complexity measures

872:Parallel algorithms

433:application servers

294:local-area networks

93:distributed program

43:networked computers

35:distributed systems

4420:Massively parallel

4398:distributed shared

4218:Cache invalidation

4182:Instruction window

3973:Manycore processor

3953:Massively parallel

3948:Parallel computing

3929:Parallel computing

3857:. pp. 66–69.

3694:, Wiley-IEEE Press

3683:2010-08-24 at the

3162:Self-Stabilization

2921:Hamilton, Howard.

2731:Haloi, S. (2015).

1993:Banks, M. (2012).

1979:2009-04-15 at the

1899:See references in

1495:. O'Reilly Media.

1138:algorithms exist.

1043:self-stabilisation

1035:consensus problems

1018:and other similar

787:(CAS), is that of

558:routing algorithms

532:telephone networks

526:telecommunications

257:distributed memory

242:parallel computing

177:The system has to

4617:Parallel slowdown

4251:Stream processing

4141:Karp–Flatt metric

3890:Media related to

3847:Conference Papers

3751:on 31 August 2021

3634:978-3-642-15259-7

3486:Stockmeyer, Larry

3335:978-0-89871-464-7

3307:978-0-201-53082-7

3281:978-0-12-370591-4

3251:978-1-55860-348-6

3221:978-1-58488-564-1

3202:978-0-201-54263-9

3175:978-0-262-04178-2

3149:978-0-262-03141-7

3130:Rivest, Ronald L.

3122:Cormen, Thomas H.

3113:978-0-521-42426-4

3087:978-0-201-35752-3

2982:theserverside.com

2854:Korach, Ephraim;

2335:. Manning. 2018.

2259:, Section 24.1.2.

2221:10.1021/ci050360b

1535:978-1-84882-744-8

1429:978-1-84882-744-8

1224:Distributed cache

1065:provide a causal

877:Again, the graph

644:cluster computing

542:computer networks

536:cellular networks

397:; or categories:

179:tolerate failures

62:SOA-based systems

4627:Software lockout

4426:Computer cluster

4361:Vector processor

4316:Array processing

4301:Flynn's taxonomy

4208:Memory coherence

3983:Computer network

3781:, archived from

3744:. Archived from

3723:, Addison-Wesley

3645:, Addison-Wesley

3503:(6): 1259–1277,

3431:, archived from

3338:, archived from

3191:(3rd ed.),

3183:Elmasri, Ramez;

3138:(1st ed.),

2614:, Sections 5–7.

2209:J Chem Inf Model

2108:. Archived from

2031:Tel, G. (2000).

1682:, pp. 8–9, 291.

1289:Jungle computing

1020:mutual exclusion

810:sorting networks

806:Boolean circuits

785:Compare-and-swap

733:computer program

712:together with a

622:control systems,

290:operating system

197:Here are common

89:computer program

50:passing messages

39:computer systems

31:computer science

4491:Coarray Fortran

4431:Beowulf cluster

4228:Synchronization

4213:Cache coherence

4203:Multiprocessing

4136:Cost efficiency

4131:Gustafson's law

3968:Multiprocessing

3958:Cloud computing

3778:ACM SIGACT News

3685:Wayback Machine

3583:Further reading

3474:10.1137/0221015

3465:10.1.1.471.6378

3412:10.1.1.116.1285

3398:ACM SIGACT News

3361:Cole, Richard;

3272:Morgan Kaufmann

3242:Morgan Kaufmann

3230:Lynch, Nancy A.

3049:, Section 19.3.

2884:10.1.1.139.7342

2530:, Section 15.2.

2518:, Section 15.3.

2514:, Section 6.7.

2490:, Section 30.5.

2435:, Chapters 2–6.

1981:Wayback Machine

1858:, p. xix, 1–2.

1229:Distributed GIS

1171:PSPACE-complete

1159:halting problem

1098:leader election

1067:happened-before

1031:fault-tolerance

804:Models such as

652:cloud computing

583:virtual reality

447:bitcoin network

163:message passing

103:connectors and

4622:Race condition

4366:Multiprocessor

4095:Hardware scout

4049:Multithreading

3988:Systolic array

3880:External links

3818:(4): 260–274.

3801:(April 1982).

3799:Needham, R. M.

3458:(1): 193–201,

3448:Linial, Nathan

3298:Addison–Wesley

3264:Shavit, Nir N.

3193:Addison–Wesley

3096:Arora, Sanjeev

3078:Addison–Wesley

2789:R. G. Gallager

2694:, Section 6.4.

2678:, Section 18.

2662:, Section 16.

2500:Andrews (2000)

2305:(3): 887–909.

2269:Andrews (2000)

1958:Andrews (2000)

1946:Andrews (2000)

1886:, Chapter 15.

1826:978-1492043454

1708:Andrews (2000)

1680:Andrews (2000)

1639:, p. 291–292.

1637:Andrews (2000)

1622:Godfrey (2002)

1561:Andrews (2000)

1478:978-1492043454

1457:, p. 1–2.

1315:Model checking

1274:Grid computing

1125:Dijkstra Prize

1063:Logical clocks

1009:Other problems

654:, and various

648:grid computing

569:World Wide Web

403:tight coupling

399:loose coupling

372:Shared nothing

105:message queues

29:is a field of

4511:Global Arrays

4438:Grid computer

3785:on 2014-01-16

3701:0-471-03600-5

3652:0-132-14301-1

3609:0-471-45324-2

3596:Attiya, Hagit

3510:10.1.1.29.669

3435:on 2014-01-16

3342:on 2009-08-06

3158:Dolev, Shlomi

3022:9789814462631

2877:(1): 84–101.

2860:Moran, Shlomo

2744:9781784398323

2714:9781119713012

2650:, Chapter 17.

2634:, p. 192–193.

2630:, p. 99–102.

2618:, Chapter 13.

2587:9783642240997

2556:Linial (1992)

2423:, Section 30.

2407:9780201895391

2371:9789814506175

2342:9781638355816

2287:, Section 24.

2279:, p. xix, 1.

2215:(3): 1034–9,

2185:9783642035135

2163:9781250065636

2115:on 2017-01-10

2080:9783540332374

2044:9780521794831

2006:9781430250746

1888:Keidar (2008)

1844:Keidar (2008)

1802:, p. xix, 2.

1694:, p. xix, 1.

1593:(1): 012038.

1502:9781492047810

1385:0-13-088893-1

1264:Fog computing

1199:Code mobility

1057:Synchronizers
